Why Reflection 70B Is Not Much Better Than GPT-4o and LLama 3.1–70B: Here Are the Results

Reflection 70B is a new large language model (LLM) based on Meta’s open-source Llama 3.1–70B Instruct, which leverages a new error self-correction technology and performs well in third-party benchmark

Welcome to the world of AI, where I present you with the latest content focused on developers and AI technology enthusiasts. I help you understand technology trends and innovative products

So, In this Article, we’re diving into some of the latest AI developments from major players like llama3.1–70 Billion, GPT-4o, and Reflection 70 Billion.

The model Reflection70B, created by a new startup Team, HyperWrite, was born. It surpassed top commercial models such as GPT-4 in the GSM8K mathematical test with an astonishing 99.2% accuracy rate, instantly detonating the entire AI circle.

How did this model, built by a small entrepreneurial Team called HyperWrite, complete training in just three weeks and achieve such excellent performance?

In this story, we will cover what Reflection 70 Billion is, What makes Reflection 70B unique, how Reflection 70B works, how I can use Reflection 70B, and Why Reflection 70B Is So Much Better Than GPT-4o And LLama 3.1–70B. Let’s uncover the secrets of Reflection70B together.

Before we start! 🦸🏻♀️

If you like this topic and you want to support me:

like my article; that will really help me out.👏

Follow me on my YouTube channel

Subscribe to me to get the latest article.

What is Reflection 70 Billion?

Reflection 70B is a new large language model (LLM) based on Meta’s open-source Llama 3.1–70B Instruct, which leverages a new error self-correction technology and performs well in third-party benchmarks.

Reflection 70B has been rigorously tested on multiple benchmarks, including MMLU and HumanEval, and uses LMSys’ LLM decontaminator to ensure that the test results are not contaminated.

The release of Reflection 70B marks a major milestone for open-source AI. It gives developers and researchers access to a powerful tool that rivals proprietary models.

What makes Reflection 70B Unique?

A major feature of Reflection 70B is its error identification and correction capabilities. Through a “reflection tuning” technology, the model can reflect on the generated content, detect errors in its reasoning, evaluate the accuracy, and then make corrections and Output the results to the user.

The model introduces several new tokens for reasoning and correcting errors. During the reasoning process, the model will first output its reasoning in special tags and can make immediate corrections when errors are detected.

Another key to the success of Reflection 70B is the use of technology from the data generation company “Glaive”, which can quickly generate high-quality synthetic data for training Reflection 70B, speeding up the model development process.

Benchmark

The Reflection70B was benchmarked for performance, with contamination checks performed using LMSys’ LLM Decontaminator.

Other data do not influence the test results, and the benchmark is based on reliable data. Of particular note is that the benchmark is limited to the <output> tag. It allows for an accurate evaluation of the answer that Reflection70B finally outputs.

GPQA: A benchmark that shows the model’s performance on general knowledge queries. Reflection 70B has an accuracy rate of 55.3%, and since it is “0-shot Reflection”, it measures the model’s inference ability without prior information. Compared to other models, Claude 3.5 Sonnet is slightly higher at 59.4%, but different models also have results of around 50%.

MMLU: A benchmark that tests multi-task learning, measuring the model’s understanding of problems in multiple fields. Reflection 70B has an accuracy rate of 89.9%, which is a very high performance compared to other models. Claude 3.5 Sonnet and GPT-4o also have high scores, but Reflection 70B is slightly better.

HumanEval: A benchmark that measures how accurately a model can answer programming problems in a code generation task. Reflection 70B achieved a score of 91%, which is almost the same as Claude 3.5 Sonnet’s 92%.

MATH: Measures performance on mathematics problems. Reflection 70B has an accuracy rate of 79.7%, which is also excellent compared to other models.

GSM8K: A benchmark that evaluates the ability to solve mathematical problems. Reflection 70B shows a very high score of 99.2%. Other models also show high results, but Reflection 70B shows the best results.

IFEval: This benchmark evaluates the model’s general inference ability. Reflection 70B shows an accuracy rate of 90.13%, which is also high compared to other models.

how Reflection 70B works

Human thought process

Reflection-tuning is a technique that improves the LLM’s ability to recognize and correct its errors in the reasoning process, but humans also unconsciously engage in a similar thought process.

For example, say you are solving a math problem.

Problem

2 + 2 × 7 = ?

General thought process

Initial reasoning : 2 + 2 = 4, 4 × 7 = 28

Error recognition: You now realize that you have done the calculations in the wrong order. Remember that multiplication has a higher priority than addition.

Inference correction : 2 × 7 = 14, 14 + 2 = 16

Final answer : 2 + 2 × 7 = 16

Reflection-Tuning has the potential to help LLMs acquire more human-like thought processes.

How to Use Reflection 70B Locally :

As usual, the best way to run the inference on any model locally is to run Ollama. So let’s set up Ollama first.

Download Ollama here (it should walk you through the rest of these steps)

Open a terminal and run

ollama run reflection:70bIt will start downloading the models and the manifest files to a hidden folder

Reflection 70 B Vs GPT-4o Vs LLama 3.1–70B

Let’s compare Reflection 70 B and GPT-4o and verify how much performance LLama 3.1–70B has.

Many people said Reflection 70 B was fine-tuned based on Llama3.1, significantly improving performance. Still, there is some suspicion that it may actually be a fine-tuned version of Llama 3 rather than Llama 3.1.

Well, seeing is believing, so I decided to give it a try.

This time, we will verify the following items.

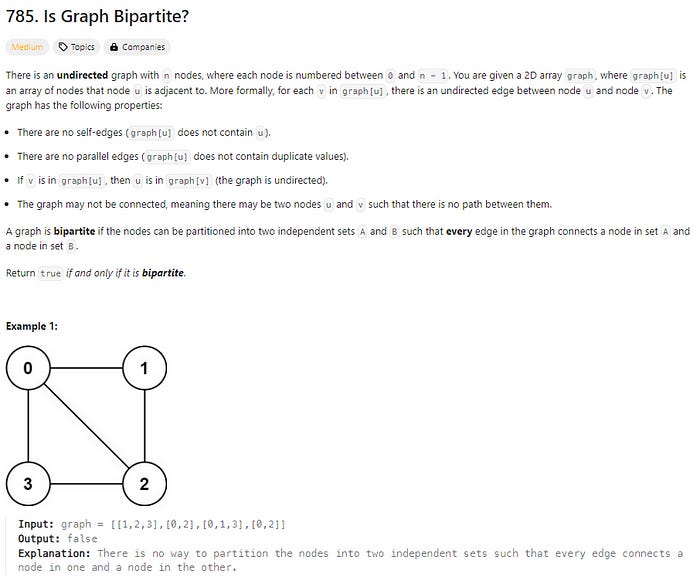

Coding

Graph algorithms have recently drawn the attention of many of you, so I decided to test them out. I went to the LeetCode platform and selected a medium-level problem related to graph algorithms.

Reflection 70 B

from collections import deque

def isBipartite(graph):

n = len(graph)

colors = [-1] * n

def bfs(node):

queue = deque([node])

colors[node] = 0

while queue:

node = queue.popleft()

for neighbor in graph[node]:

if colors[neighbor] == -1:

colors[neighbor] = 1 - colors[node]

queue.append(neighbor)

elif colors[neighbor] == colors[node]:

return False

return True

for i in range(n):

if colors[i] == -1 and not bfs(i):

return False

return True

# Example usage:

# Test with example 1

graph1 = [[1,2,3],[0,2],[0,1,3],[0,2]]

print(isBipartite(graph1)) # Output: False

# Test with example 2

graph2 = [[1,3],[0,2],[1,3],[0,2]]

print(isBipartite(graph2)) # Output: True

GPT-4o

python

Copy code

from collections import deque

def isBipartite(graph):

# Store the color of each node, -1 means uncolored

color = [-1] * len(graph)

# Check each connected component

for i in range(len(graph)):

if color[i] == -1: # If the node is uncolored

# Start BFS

queue = deque([i])

color[i] = 0 # Color the starting node with color 0

while queue:

node = queue.popleft()

for neighbor in graph[node]:

if color[neighbor] == -1: # If the neighbor is uncolored

# Assign the opposite color to the neighbor

color[neighbor] = 1 - color[node]

queue.append(neighbor)

elif color[neighbor] == color[node]: # If the neighbor has the same color

return False # The graph is not bipartite

return True # If all nodes are colored successfully

# Example 1

graph1 = [[1,2,3],[0,2],[0,1,3],[0,2]]

print(isBipartite(graph1)) # Output: False

# Example 2

graph2 = [[1,3],[0,2],[1,3],[0,2]]

print(isBipartite(graph2)) # Output: True

LLama 3.1–70B

Here is a solution in Python using Breadth-First Search (BFS) and graph coloring:

from collections import deque

def isBipartite(graph):

"""

Determine if an undirected graph is bipartite.

Args:

graph (list of lists): Adjacency list representation of the graph.

Returns:

bool: True if the graph is bipartite, False otherwise.

"""

n = len(graph)

colors = [-1] * n # Initialize node colors to -1 (unvisited)

for i in range(n):

if colors[i] == -1: # If node is unvisited

queue = deque([i])

colors[i] = 0 # Color node 0 (arbitrary choice)

while queue:

node = queue.popleft()

for neighbor in graph[node]:

if colors[neighbor] == -1: # If neighbor is unvisited

colors[neighbor] = 1 - colors[node] # Color neighbor with opposite color

queue.append(neighbor)

elif colors[neighbor] == colors[node]: # If neighbor has same color as node

return False # Graph is not bipartite

return True # Graph is bipartite

Example Use Cases:

print(isBipartite([[1,2,3],[0,2],[0,1,3],[0,2]])) # False

print(isBipartite([[1,3],[0,2],[1,3],[0,2]])) # True

As we see in the result, GPT-4o and LLama 3.1–70B are better choices because they have a clean structure, fewer nested functions, and use BFS efficiently. LLama 3.1–70B also includes docstrings, which makes the code easier to read and maintain. In terms of performance, GPT-4o and LLama 3.1–70B are faster than Reflection 70B. They have a simpler and more efficient design, avoiding unnecessary loops and nesting, which makes them better for handling large graphs and easier to maintain over time.

content

I went to create an email to make an appointment with some community friends and asked :

please write email appointment to my friend to schedule meeting Reflection 70 B

Subject: Let's Catch Up - Appointment Request

Dear [Friend's Name],

I hope this email finds you well. It's been a while since we last spoke,

and I'd love to catch up with you. I was thinking of scheduling a meeting

at your earliest convenience.

Would you be available to meet at the coffee shop downtown next week?

I've got a few options in mind:

Monday at 2 PM

Wednesday at 10 AM

Friday at 3:30 PM

If none of these times suit you, please don't hesitate to suggest an alternative that works better for your schedule.

I'm really looking forward to hearing back from you and catching up.

Best regards, [Your Name]GPT-4o

Subject: Scheduling a Meeting

Hi [Friend's Name],

I hope you're doing well! I wanted to reach out and see if we could

schedule a meeting sometime soon. I’d love to catch up and discuss

[mention any specific topic if needed].

Would [suggest a date and time] work for you? If not, let me know your

availability, and we can figure out a time that works for both of us.

Looking forward to hearing from you!

Best,

[Your Name]LLama 3.1–70B

Here is an example email to schedule a meeting with your friend:

Subject: Let's Catch Up - Meeting Invitation

Dear [Friend's Name],

Hope this email finds you well! I was thinking it's been a while

since we last caught up, and I'd love to schedule a meeting to chat

about everything that's been going on.

Would you be available to meet at your convenience? I was thinking

we could grab coffee or lunch sometime this week or next. Here are a

few dates and times that work for me:

[Insert dates and times, e.g. Wednesday at 2 PM, Friday at 10 AM, etc.]

Let me know which one works best for you, or if you have a different time

in mind. I'm flexible and just want to make sure we can catch up soon.

Looking forward to hearing back from you and seeing you soon!

Best, [Your Name]

As you can see in the result from Reflection 70B, it presents a specific date, which, in my personal view, is not a good way to write an email as it might feel restrictive. GPT-4o and LLama 3.1–70B offer more flexibility, which can be perceived as more polite and considerate, especially if the recipient prefers to have a say in scheduling

Mathematics

I went to Google search, and I typed “math exercises probability data science easy questions,” and I picked one easy question for the test

Consider a tetrahedral die and roll it twice. What is the probability that the number on the first roll is strictly higher than the number on the second roll?Reflection 70 B

<thinking>

First, let's consider a tetrahedral die. A tetrahedron has 4 faces. So, a tetrahedral die has 4 possible outcomes when rolled: 1, 2, 3, and 4.

We need to find the probability of rolling a higher number on the first roll than the second roll.

To do this, we can list out all the favorable outcomes:

First roll: 2, Second roll: 1

First roll: 3, Second roll: 1 or 2

First roll: 4, Second roll: 1, 2, or 3

Now, let's count the total number of ways this can happen:

1 way for (2,1)

2 ways for (3,1) and (3,2)

3 ways for (4,1), (4,2), and (4,3) Total favorable outcomes: 1 + 2 + 3 = 6

The total number of possible outcomes when rolling twice is 4 * 4 = 16

So, the probability is 6/16 = 3/8

<reflection> Let's verify this result:

It makes sense that we don't include cases where the numbers are equal, as the question asks for "strictly higher".

We've considered all possibilities: 2 > 1, 3 > 1 and 3 > 2, 4 > 1, 4 > 2, and 4 > 3.

The calculation is correct: 3/8 is indeed the simplest form of 6/16. This reasoning seems correct. </reflection> </thinking>

<output> The probability that the number on the first roll is strictly higher than the number on the second roll when using a tetrahedral die is 3/8 or approximately 0.375. </output>GPT-4o

Let's calculate the probability step by step.

A tetrahedral die has 4 faces numbered 1, 2, 3, and 4. When rolling the die twice, we want to determine the probability that the number rolled on the first die is strictly higher than the number rolled on the second die.

Step 1: Determine the total number of possible outcomes.

Each roll can result in one of 4 possible numbers, and since we are rolling the die twice, the total number of outcomes is:

4×4=16

So, there are 16 possible outcomes when rolling the die twice.

Step 2: Identify favorable outcomes.

We need to count the cases where the first number is strictly greater than the second number. These cases are:

(2, 1)

(3, 1), (3, 2)

(4, 1), (4, 2), (4, 3)

Thus, there are 6 favorable outcomes: (2, 1), (3, 1), (3, 2), (4, 1), (4, 2), (4, 3).

Step 3: Calculate the probability.

The probability is the ratio of favorable outcomes to total outcomes:

6/16 = 3/8

Thus, the probability that the number on the first roll is strictly higher than the number on the second roll is

3/8

.LLama 3.1–70B

Step 1: Determine the possible outcomes for each roll of the tetrahedral die.

A tetrahedral die has 4 faces, so there are 4 possible outcomes for each roll. The outcomes can be numbered from 1 to 4.

Step 2: Enumerate the pairs of rolls where the first roll is strictly higher than the second roll.

To calculate the probability, we need to identify all combinations where the first roll is higher than the second. These pairs are: (2,1), (3,1), (3,2), (4,1), (4,2), and (4,3).

Step 3: Calculate the total number of possible outcomes for two rolls.

Since each roll has 4 possible outcomes, the total number of outcomes for two rolls is 4 * 4 = 16.

Step 4: Calculate the probability.

The probability is the number of favorable outcomes (where the first roll is strictly higher than the second) divided by the total number of possible outcomes.

Step 5: Determine the number of favorable outcomes.

From Step 2, we identified 6 favorable outcomes.

Step 6: Calculate the probability using the numbers from Steps 3 and 5.

Probability = Number of favorable outcomes / Total number of possible outcomes = 6 / 16 = 3 / 8.All the models get the answer correct, but GPT-4o is the best solution. It provides a detailed and clear step-by-step explanation, making it easy to follow and understand. While GPT-4o and LLama 3.1–70B are effective, GPT-4o’s answer is more concise and straightforward. However, if verification and accuracy are a priority, Reflection70B is also a strong contender because it includes a verification step to ensure correctness.

Conclusion :

I have seen many YouTubers and influencers talking about Reflection70B. Out of curiosity, I decided to try it out. I found that Reflection70B doesn’t surpass LLama 3.1–70B or GPT-40. It’s not even on the same level as these models. The new techniques added don’t seem to make the model more robust.

I’m also wondering why Meta hasn’t added Reflection-Tuning to their models if it could help improve performance. It’s hard to say for now, but hopefully, HyperWrite will enhance their model to generate higher-quality outputs.

Reference :

🧙♂️ I am an AI Generative expert! If you want to collaborate on a project, drop an inquiry here or Book a 1-on-1 Consulting Call With Me.