Scrape Anything With DeepSeek R1 V2 + Minion-Agent (FREE & EASY)

In this story, I have a quick tutorial showing how to create a powerful chatbot using Minion-Agent, Smolagents, and DeepSeek-R1-0528 to develop an AI agent capable of scraping any website you choose. On top of that, you can ask questions about your data, and the agent will answer them.

During the weekend, I scroll through Twitter to see what happened in the AI community. One of the AI agent frameworks called Minion-Agent has drawn my attention. When AI is combined with tools, agents are no longer just concepts!

Please test it before writing any negative comments. This is not clickbait or sponsored content.

This agent framework, which integrates multiple functions such as browser operation, MCP (Model Context Protocol), automatic tool calling, task planning and in-depth research, is becoming the focus of the AI field with its powerful automation capabilities and flexible application scenarios.

The core value of Minion-Agent is that it elegantly solves the problem of “framework fragmentation”. Developers who want to build an AI agent often need to switch between multiple frameworks such as OpenAI, LangChain, Google AI and Smolagents, because each framework has its own advantages and limitations. That switching is one of the main obstacles in current AI agent development.

As my workday was about to end, I saw that DeepSeek announced a minor version upgrade of its R1 series reasoning model. The latest version, DeepSeek-R1-0528, has 685 billion parameters, and the model’s thinking depth and reasoning ability have been significantly improved.

DeepSeek-R1-0528 still uses the DeepSeek V3 Base model released in December 2024 as its base, but it invests more computing power in the post-training process, which is where the improvement comes from.

The updated R1 model has achieved outstanding results among current models developed in China in multiple benchmark tests, including mathematics, programming, and general logic. Its overall performance is comparable to that of top international models such as o3 and Gemini-2.5-Pro.

So, let me give you a quick demo of a live chatbot to show you what I mean.

I will ask the chatbot a question: “I want to buy the MEK AI-Enhanced Gaming PC Desktop Computer. Help me find a better price and compare it with other laptops. Make the results in table form.”

If you take a look at how the chatbot generates the output, you’ll see that the agent research assistant uses the configured DeepSeek model and receives your query about finding better prices for the MEK gaming PC.

The agent’s planning system kicks in every three steps to strategise the research approach — first, it needs to understand the specific product specifications, then search for pricing across multiple retailers, and finally, compare it with similar laptops in a table format.

As it browses, the parse_tool_args_if_needed function ensures all the tool calls for web scraping and data extraction have properly formatted JSON arguments converted to Python dictionaries. The browser tool extracts product information, prices, and specifications, while the screenshot function maintains a visual record of each step, clearing old screenshots from memory to prevent overflow.

The agent continuously monitors its search results and self-corrects when initial approaches fail — for example, if searching for the exact “MEK AI-Enhanced Gaming PC” yields no results, it automatically pivots to broader search terms like “RTX 5090 desktop” to find comparable alternatives.

Once it gathers sufficient pricing data for the MEK PC and identifies comparable laptops with similar RTX 5090 and Ryzen 7 specs, the agent uses its HTML generation tools to create a structured comparison table. Throughout this process, the CodeAgent can execute Python code to format the data, calculate price differences, and organize the comparison table.

Finally, it returns the completed research with both the better pricing options for your specific MEK PC and a comprehensive comparison table showing how it stacks up against similar gaming laptops in terms of specs, performance, and value.

Disclaimer: This article is only for educational purposes. We do not encourage anyone to scrape websites, especially those web properties that may have terms and conditions against such actions.

So, by the end of this story, you will understand what Minion-Agent is, how it works, and how to use it to create a powerful agentic chatbot.


What is Minion-Agent?

Minion-agent is a simple yet powerful AI agent framework. Its goal is to enable users to complete complex tasks using AI technology easily. It integrates a variety of mainstream AI frameworks and tools, providing a unified platform so that you can get started without worrying about technical details. Its core features include:

Multi-framework support

Minion-Agent is compatible with a variety of mainstream AI frameworks, such as OpenAI, LangChain, Google AI and Smolagents. Through a unified interface, you can easily call the capabilities of different frameworks.

Rich tool set

This framework has various practical tools built in, such as web browsing, file operations, and task automation. It also supports extensions, and you can add custom tools as needed.

Multi-agent collaboration

You can create multiple sub-agents and let them work together to complete tasks. Minion-Agent will automatically distribute and manage the work of these agents.

Intelligent web page operations

It integrates browser automation functions to complete complex web page tasks, such as data crawling or information extraction.

Deep Research Capabilities

Minion-Agent has a built-in DeepResearch agent that can conduct in-depth research around a topic and automatically organise and summarise information.

Simply put, Minion-Agent is like a versatile intelligent assistant that can adapt to different scenarios and needs.

How does it work?

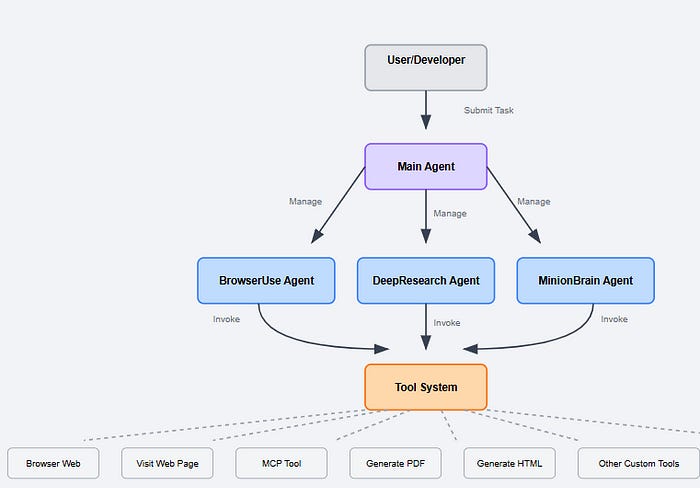

Judging from the flow chart, Minion-agent adopts a sophisticated “information flow” design pattern, realising a complete closed loop from user instructions to final results.

Browser Use Agent :

The BrowserUseAgent wraps the browser-use library to provide automated web browsing within the MinionAgent framework. It dynamically loads LangChain models and lazily initialises a Browser instance with Chrome. Tasks are executed via run_async(), which updates the agent's task prompt and conversation history with completion criteria before running the browser automation. The key innovation is dynamic task updating: the same agent instance can handle multiple different prompts by modifying its internal state, which makes the browser automation reusable.

link: Code
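To make lazy initialisation and dynamic task updating concrete, here is a minimal sketch. It is not the BrowserUseAgent source: FakeBrowser and ReusableBrowserAgent are hypothetical stand-ins, and no real browser is launched.

```python
from dataclasses import dataclass, field
from typing import List, Optional


class FakeBrowser:
    """Hypothetical stand-in for a real browser session (no Chrome needed)."""

    def open(self, task: str) -> str:
        return f"done: {task}"


@dataclass
class ReusableBrowserAgent:
    """Hypothetical sketch: one agent instance that serves many prompts."""

    task: str = ""
    history: List[str] = field(default_factory=list)
    _browser: Optional[FakeBrowser] = None  # created on first use only

    @property
    def browser(self) -> FakeBrowser:
        # Lazy initialisation: build the browser the first time it is needed.
        if self._browser is None:
            self._browser = FakeBrowser()
        return self._browser

    def run(self, prompt: str) -> str:
        # Dynamic task updating: overwrite the task and record it in the history.
        self.task = prompt
        self.history.append(prompt)
        return self.browser.open(self.task)


agent = ReusableBrowserAgent()
first = agent.run("open example.com")
browser_after_first = agent.browser
second = agent.run("read the page title")
```

Both calls go through the same agent and the same browser object; only the task and the history change between prompts.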

Deep Research Agent :

The DeepResearcher executes a multi-stage research workflow. It first clarifies the topic through optional user dialogue, then generates initial search queries via a planning LLM. It performs parallel searches using Tavily with thread-safe caching and summarizes the raw content asynchronously. Next, it enters an iterative research loop that evaluates completeness and generates additional queries within a budget constraint. It then deduplicates and filters the results using LLM-based relevance ranking. Finally, it synthesizes a comprehensive answer using a specialized model (DeepSeek-R1-Distill-Llama-70B), with optional interactive feedback for refinement.

link: Code
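The iterative loop with a budget, a cache, and de-duplication can be sketched in a few lines. This is an illustration under stated assumptions, not the DeepResearcher code: the search and follow-up functions are hypothetical stubs that replace Tavily and the planning LLM, and FAKE_INDEX is made-up placeholder data.

```python
from typing import Callable, Dict, List


def research(
    topic: str,
    search: Callable[[str], List[str]],
    follow_ups: Callable[[List[str]], List[str]],
    budget: int = 3,
) -> List[str]:
    """Run at most `budget` search rounds and return de-duplicated results."""
    queries = [topic]
    cache: Dict[str, List[str]] = {}
    results: List[str] = []
    for _ in range(budget):
        if not queries:
            break  # no open questions left, so the research counts as complete
        for query in queries:
            if query not in cache:  # never run the same query twice
                cache[query] = search(query)
            for item in cache[query]:
                if item not in results:  # de-duplicate across queries
                    results.append(item)
        # Ask for additional queries, skipping any that were already searched.
        queries = [q for q in follow_ups(results) if q not in cache]
    return results


# Hypothetical placeholder data standing in for a real search backend.
FAKE_INDEX = {
    "gaming pc": ["page A", "page B"],
    "gaming pc price": ["page B", "page C"],
}


def fake_search(query: str) -> List[str]:
    return FAKE_INDEX.get(query, [])


def fake_follow_ups(results: List[str]) -> List[str]:
    # Pretend the evaluator wants pricing data until "page C" has been found.
    return [] if "page C" in results else ["gaming pc price"]


found = research("gaming pc", fake_search, fake_follow_ups)
```

The budget caps how many rounds can run even if the evaluator keeps asking for more queries.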

Minion Brain:

MinionAgent is an abstract base class that provides a unified interface for different agent frameworks. A factory pattern dynamically imports the specific implementation (Smolagents, LangChain, OpenAI, LlamaIndex, Google, MinionBrain, BrowserUse, DeepResearch). The class handles sync/async execution, using nest_asyncio for nested event loops, and makes instances callable by delegating __call__ to run(). It defines the abstract methods _load_agent(), run_async(), and tools that subclasses must implement, prevents direct instantiation, and restricts access to the underlying agent implementation to maintain the framework abstraction.

link: Code
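The combination of an abstract base class, a factory, and __call__ delegation can be sketched as follows. All names here (Framework, BaseAgent, EchoAgent, ShoutAgent) are hypothetical and chosen for illustration; the real MinionAgent imports its implementations dynamically and is asynchronous.

```python
from abc import ABC, abstractmethod
from enum import Enum
from typing import Dict, Type


class Framework(Enum):
    ECHO = "echo"
    SHOUT = "shout"


class BaseAgent(ABC):
    """Unified interface; subclasses supply the framework-specific logic."""

    @abstractmethod
    def run(self, prompt: str) -> str:
        """Process a prompt and return the answer."""

    def __call__(self, prompt: str) -> str:
        # Calling the instance simply delegates to run().
        return self.run(prompt)

    @staticmethod
    def create(framework: Framework) -> "BaseAgent":
        # Factory: look up the concrete class for the requested framework.
        return _REGISTRY[framework]()


class EchoAgent(BaseAgent):
    def run(self, prompt: str) -> str:
        return prompt


class ShoutAgent(BaseAgent):
    def run(self, prompt: str) -> str:
        return prompt.upper()


_REGISTRY: Dict[Framework, Type[BaseAgent]] = {
    Framework.ECHO: EchoAgent,
    Framework.SHOUT: ShoutAgent,
}

agent = BaseAgent.create(Framework.SHOUT)
answer = agent("hello")
```

Because run() is abstract, instantiating BaseAgent directly raises a TypeError, which is how this sketch prevents direct instantiation.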

Let’s start coding?

Before we dive into our application, we will create an ideal environment for the code to work. For this, we need to install the necessary Python libraries.

First, we install the libraries the project depends on. Assuming the repository ships a requirements.txt file, a single pip command covers them. Since the demo uses the DeepSeek models, you must also set the DeepSeek API key first.

pip install -r requirements.txt

The next step is the usual one: we import the relevant libraries, whose significance will become evident as we proceed.

We start by importing classes from smolagents, an agent framework that simplifies creating agents with just a few lines of code. It’s a powerful yet lightweight tool.

minion_agent is the package for Minion-Agent, the new open-source AI agent development framework that addresses the framework fragmentation problem by integrating multiple AI frameworks behind one interface.

"""Example usage of Minion Agent."""
import asyncio
import os
from io import BytesIO
from time import sleep

from dotenv import load_dotenv
from PIL import Image
from smolagents import ActionStep, ChatMessage, CodeAgent
from smolagents.models import parse_json_if_needed

from minion_agent import AgentConfig, AgentFramework, MinionAgent

load_dotenv()  # Read API keys from a local .env file, if one exists

Next, the example defines parse_tool_args_if_needed, which takes a message object of type ChatMessage and checks whether it has any tool calls associated with it. If there are tool calls, it loops through each one.

For every tool call, it accesses the function.arguments attribute, which is expected to be a string containing JSON data, and passes it to the helper function parse_json_if_needed, which tries to convert that JSON string into a Python dictionary (if it isn't one already).

After parsing, it updates the function.arguments field with the parsed result. Once all tool calls have been processed and their arguments potentially parsed, the function returns the updated message object.
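To see the parsing step in isolation, here is a minimal sketch that uses only the standard library. It is an assumption about what such a helper does, not the smolagents implementation, and parse_args_if_needed is a hypothetical name.

```python
import json
from typing import Any


def parse_args_if_needed(arguments: Any) -> Any:
    """Return a parsed object for JSON strings; pass everything else through."""
    if isinstance(arguments, str):
        try:
            return json.loads(arguments)
        except json.JSONDecodeError:
            # Not valid JSON, so keep the original string untouched.
            return arguments
    return arguments


parsed = parse_args_if_needed('{"action": "screenshot"}')
untouched = parse_args_if_needed({"action": "screenshot"})
plain_text = parse_args_if_needed("not json")
```

Values that are already dictionaries are returned unchanged, so the helper is safe to call more than once on the same message.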

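def parse_tool_args_if_needed(message: ChatMessage) -> ChatMessage:
    # Skip messages that carry no tool calls (tool_calls may be None)
    if message.tool_calls is not None:
        for tool_call in message.tool_calls:
            # Convert a JSON string of arguments into a Python dict when possible
            tool_call.function.arguments = parse_json_if_needed(tool_call.function.arguments)
    return message

Then the example defines a save_screenshot function that captures a screenshot of the browser page using Playwright during a specific memory step in the agent’s workflow.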

First, it pauses for one second with sleep(1.0) so that any animations or JavaScript transitions can complete before the image is captured. It then looks up the browser tool in the agent’s tools. If the browser tool is available, the function clears old screenshots from memory, namely those attached to steps at least two steps earlier than the current one, to conserve memory.

Next, it uses the browser tool to take a screenshot (action="screenshot"). If the screenshot succeeded and is included in the result, it converts the returned byte data into a PIL image and stores a copy in the current memory step's observations_images so that it persists for later use.

After that, it fetches the current browser state (action="get_current_state") to extract the current URL. If successful, it either sets or appends the URL string to the memory step's observations, depending on whether observations were already recorded.
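The clean-up rule is easy to check in isolation. The sketch below uses a hypothetical Step dataclass in place of the real ActionStep and strings in place of images; it only demonstrates which steps keep their screenshots.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Step:
    """Hypothetical stand-in for an agent memory step."""

    step_number: int
    observations_images: Optional[List[str]] = None


def prune_old_screenshots(steps: List[Step], current_step_number: int) -> None:
    # Drop screenshots from steps that are at least two steps behind.
    for step in steps:
        if step.step_number <= current_step_number - 2:
            step.observations_images = None


steps = [Step(n, [f"shot-{n}"]) for n in range(1, 5)]
prune_old_screenshots(steps, current_step_number=4)
kept = [s.step_number for s in steps if s.observations_images is not None]
```

With the current step at 4, only steps 3 and 4 keep their images, so memory use stays bounded however long the run is.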

# Set up screenshot callback for Playwright
def save_screenshot(memory_step: ActionStep, agent: CodeAgent) -> None:
    sleep(1.0)  # Let JavaScript animations happen before taking the screenshot

    # Get the browser tool
    browser_tool = agent.tools.get("browser")
    if browser_tool:
        # Clean up old screenshots to save memory
        for previous_memory_step in agent.memory.steps:
            if isinstance(previous_memory_step, ActionStep) and previous_memory_step.step_number <= memory_step.step_number - 2:
                previous_memory_step.observations_images = None

        # Take screenshot using Playwright
        result = browser_tool(action="screenshot")
        if result["success"] and "screenshot" in result.get("data", {}):
            # Convert bytes to PIL Image
            screenshot_bytes = result["data"]["screenshot"]
            image = Image.open(BytesIO(screenshot_bytes))
            print(f"Captured a browser screenshot: {image.size} pixels")
            memory_step.observations_images = [image.copy()]  # Create a copy to ensure it persists

        # Get current URL
        state_result = browser_tool(action="get_current_state")
        if state_result["success"] and "url" in state_result.get("data", {}):
            url_info = f"Current url: {state_result['data']['url']}"
            memory_step.observations = (
                url_info if memory_step.observations is None else memory_step.observations + "\n" + url_info
            )

After that, the example configures an AI agent using the minion_agent framework with DeepSeek as the LLM. The agent is set up as a “research_assistant” CodeAgent that can execute code and has access to browser automation, PDF generation, and HTML generation tools, including one that saves and generates HTML files.

It reads the model's API key from an environment variable for authentication, allows all imports via the wildcard “*”, runs planning every 3 steps, and registers a screenshot callback that captures the agent’s browser state during execution.

The commented model_type suggests this was originally configured for a different model but switched to DeepSeek, and MCPTool was removed to prevent connection problems.

# Configure the agent
agent_config = AgentConfig(
    model_id="deepseek/deepseek-reasoner",
    name="research_assistant",
    description="A helpful research assistant",
    model_args={
        # DeepSeek key, matching the DeepSeek model selected above
        "api_key": os.environ.get("DEEPSEEK_API_KEY"),
    },
    tools=[
        "minion_agent.tools.browser_tool.browser",
        "minion_agent.tools.generation.generate_pdf",
        "minion_agent.tools.generation.generate_html",
        "minion_agent.tools.generation.save_and_generate_html",
        # MCPTool removed to avoid connection issues
    ],
    agent_type="CodeAgent",
    # model_type="GoogleModel",  # Changed to GoogleModel for Gemini
    agent_args={
        "additional_authorized_imports": "*",
        "planning_interval": 3,
        "step_callbacks": [save_screenshot],
    },
)

So, the example sets up an AI agent configuration using the AgentConfig class and prepares it to act as a research assistant. The agent is named "research_assistant" and described as "A helpful research assistant".

It uses the DeepSeek model and retrieves the API key from the environment variable DEEPSEEK_API_KEY under model_args, which provides the credentials for using the model.

The agent is equipped with a set of tools listed in the tools section, including a browser tool for web browsing and several generation tools for creating PDFs and HTML content. The "MCPTool" has been removed from the configuration to prevent connection problems.

The agent type is specified as "CodeAgent", and under agent_args two behaviours are defined: "additional_authorized_imports": "*" allows unrestricted module imports, and "planning_interval": 3 means the agent will plan every 3 steps.

It also sets up step_callbacks, specifically the save_screenshot function, so that after each step, it captures a screenshot of the browser if applicable.
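To picture what a planning interval of 3 means, the sketch below lists which steps would trigger planning. It assumes that planning runs on the first step and then again every planning_interval steps; that schedule is an assumption for illustration and may differ between smolagents versions. The function name is hypothetical.

```python
def is_planning_step(step_number: int, planning_interval: int) -> bool:
    """Assumed schedule: plan on step 1, then every `planning_interval` steps."""
    return step_number == 1 or (step_number - 1) % planning_interval == 0


# Which of the first ten steps would start with a planning phase?
planning_steps = [n for n in range(1, 11) if is_planning_step(n, 3)]
```

Under that assumption, the agent re-plans at steps 1, 4, 7 and 10 and simply acts on the steps in between.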

# Optional sub-agents. In this listing they are defined but not passed to agent_config.
managed_agents = [
    AgentConfig(
        name="search_web_agent",
        model_id="deepseek/deepseek-reasoner",  # Same DeepSeek model as the main agent
        description="Agent that can use the browser, search the web, navigate",
        tools=["minion_agent.tools.browser_tool.browser"],
        model_args={
            "api_key": os.environ.get("DEEPSEEK_API_KEY"),
        },
        # model_type="GoogleModel",  # Changed to GoogleModel
        agent_type="ToolCallingAgent",
    ),
    AgentConfig(
        name="visit_webpage_agent",
        model_id="deepseek/deepseek-reasoner",
        description="Agent that can visit webpages",
        tools=["minion_agent.tools.web_browsing.visit_webpage"],
        model_args={
            "api_key": os.environ.get("DEEPSEEK_API_KEY"),
        },
    ),
]

The main function is written to run asynchronously, which means it can handle tasks like web browsing or searching without freezing the program. First, it tries to create the assistant using the configuration defined earlier (agent_config) and the SMOLAGENTS framework.

Once the assistant is ready, it’s given a job: look for a better price on a MEK AI-Enhanced Gaming PC and compare it with other laptops, showing everything in a table. If something goes wrong during the process, it catches the error and prints out what happened.

At the end, the script starts everything by running the main() function with asyncio.run(). It’s a clean way to let an AI do some smart research for you and give back a simple, helpful result.

async def main():
    try:
        # Create and run the agent
        agent = await MinionAgent.create(AgentFramework.SMOLAGENTS, agent_config)
        result = agent.run(
            "i want buy MEK AI-Enhanced Gaming PC Desktop Computer - "
            "NVIDIA GeForce RTX 5090, AMD Ryzen 7 9700X 5.5GHz, 32GB DDR5 RGB, 2TB NVME M.2 SSD, 1300W 80+ Gold PSU, WiFi 7, Windows 11 "
            "help me to find a better price and compare with other laptop make them in table form."
        )
        print("Agent's response:", result)
        print("Done!")
    except Exception as e:
        print(f"Error: {str(e)}")
        raise


if __name__ == "__main__":
    asyncio.run(main())

Conclusion

The emergence of Minion-Agent has injected new vitality into the field of AI agent development.

It is not only a technical framework, but also represents an open and collaborative development concept. In this era of rapid iteration of AI technology, the flexibility of open source projects and the community collaboration model may be the best solution to cope with complex changes.
