In this story, I will walk through how to build an agentic reasoning chatbot with LangGraph, Agentic RAR, Nano-GraphRAG, and Claude 3.7 Sonnet, for your business or personal use.

First off, reasoning in large language models (LLMs) might not be AGI or ASI yet, but it’s a massive leap forward in how these models handle tasks like math, coding, and complex thinking.

Take Claude 3.7 Sonnet, a recent release billed as the first hybrid reasoning model on the market. It can respond quickly or think more deeply as needed, and users can adjust how long it thinks. It can also switch into an extended thinking mode and work through a problem step by step before answering.

Large Language Models (LLMs) struggle with Sequential Reasoning. For example, if you ask an LLM to solve a multi-step math problem, it might forget earlier steps and give a wrong answer.

If something is unclear, they guess instead of asking for details, which can lead to mistakes. Sometimes they even give different answers to the same question, which is confusing. They also have trouble with expert-level topics and long conversations.

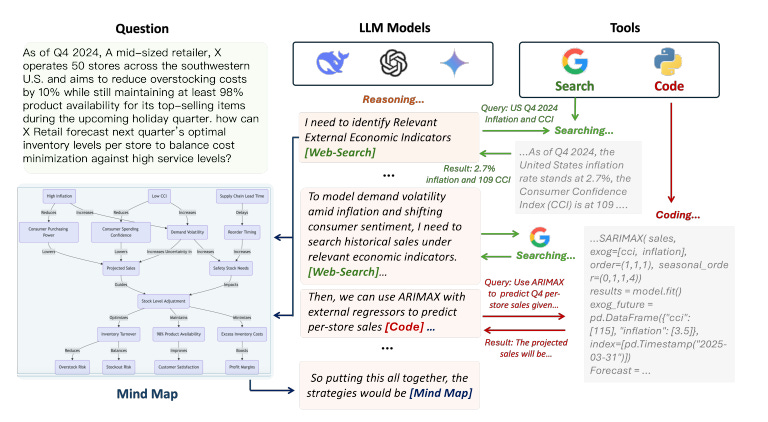

Imagine you have a really smart friend who not only knows a lot but can also go online to search for more information, write and run simple programs, and even draw a mind map to help connect ideas.

That’s where Agentic RAR comes in. It builds on the familiar retrieval-augmented generation (RAG) model by introducing a dynamic, reasoning-first approach to the problem.

So, let me give you a quick demo of a live chatbot to show you what I mean.

I ran the Python code and asked the agent the following question:

“Generate code to check whether the following numbers are palindromes: 123, 121, 12321, 12345, 123454321.”

If you look at how the agent generates the answer, you will see that it creates a basic directory structure to store data.

When a user asks a question, the initial reasoning agent analyzes it, identifies which specialized agents are needed, and detects specific tags indicating agent queries such as web search, code execution, or mind mapping.

This is an important step: each agent has a partner responsible for collecting data. For example, while the code agent performs its task, another agent documents the process and stores it in a file.

The route_to_agent function directs the workflow by examining the state’s reasoning and needed agents, extracting embedded queries, deciding which agent to activate, and storing routing decisions.

When a web search is needed, the web_search_agent queries the Tavily API, processes results, updates reasoning, and stores both the query and results before removing itself from the agent list.

The code_execution_agent generates and executes code and extracts outputs with formatted tags, updates reasoning, stores results, and removes itself after execution.

The mind map agent plays two roles: collecting knowledge throughout the process and organizing structured insights when queried. It compiles all gathered information, formats it into a knowledge document, and stores it in the GraphRAG knowledge graph for retrieval and structured analysis.

Knowledge management relies on in-process collection via the collected_knowledge dictionary and persistent storage through the GraphRAG-based MindMap class, which uses directory-based storage, JSON text chunks, vector embeddings for semantic search, and query capabilities through graph_retrieval, allowing knowledge to grow over time.

Finally, the synthesize_answer method consolidates all collected information, generates a comprehensive summary, stores it in the mind map, queries for relevant insights, and produces the final answer using both the reasoning chain and mind map insights.

Problems I Faced During Development

While developing the chatbot, I faced several challenges. One major issue was mind map initialization: the agent tried to access data before any had been collected, causing “matrix” errors.

To fix this, I changed the initialization approach to create only basic directory structures at startup and let agents collect real data before storing information. Another challenge was state management across agents, as information was getting lost between transitions.

I solved this by adding a persistent knowledge collection mechanism using a collected_knowledge dictionary to track outputs and ensure step-by-step knowledge accumulation.

Finally, knowledge integration in the mind map wasn’t working well, so I modified the workflow to collect data continuously, store formatted knowledge at the right moments, and query the mind map before synthesis.

The biggest breakthrough was redesigning the mind map agent to be a passive collector rather than an explicitly called agent, allowing it to build comprehensive knowledge graphs naturally throughout the reasoning process.

By the end of this story, you will understand what Agentic RAR and Nano-GraphRAG are, why Agentic RAR is so unique, how it works, and how we are going to use Agentic RAR to create a powerful reasoning chatbot.


Meet Nano-GraphRAG

GraphRAG, proposed by Microsoft, is very effective, but the official implementation and usage are very complex and difficult to modify and customize.

Nano-GraphRAG is a lightweight and flexible GraphRAG implementation that aims to simplify the use and customization of GraphRAG. It retains the core functionality of GraphRAG while providing a simpler code structure, a more user-friendly experience, and greater customizability.

With only about 1,100 lines of code (excluding tests and prompts), Nano-GraphRAG is designed to be lightweight, asynchronous, and fully typed, making it an ideal choice for developers who want to integrate GraphRAG into their projects without adding complexity.

What is Agentic RAR?

Agentic Reasoning is like giving AI models the ability to use extra tools — just like how we use a calculator for complex math, a dictionary for word meanings, or a search engine to find answers online.

Imagine asking an AI, “What’s the population of Japan right now?” Instead of relying only on what it learned in the past, it can check the latest data and give you an accurate answer. This makes AI much smarter and more useful because it doesn’t just guess — it finds the best answers by using the right tools, just like we do in real life!

Why is Agentic RAR unique?

Agentic RAR is unique because it standardizes tool calls through a unified API, making it easy to integrate new tools like a chemical equation solver with minimal effort.

It also distributes cognitive load dynamically, assigning simple tasks to lightweight agents and complex calculations to dedicated code agents. The reported gains in medical diagnosis tests are a 40% improvement in response speed and a 72% reduction in computing power usage.

Its multimodal memory fusion lets Mind Map agents store and link text, formulas, and charts, building a three-dimensional knowledge system that helps it handle complex problems.

How it works

Agentic Reasoning uses a structured approach that combines various external agents to enhance the reasoning capabilities of large language models (LLMs).

It uses a web-search agent to fetch real-time information from the internet, which helps supplement the LLM’s knowledge beyond its pre-trained dataset.

It uses a coding agent to execute code or perform computational tasks; this agent supports quantitative reasoning and addresses problems requiring programming skills.

It uses a Mind Map agent to construct knowledge graphs that organize and visualize complex logical relationships, aiding in structuring the reasoning process.

The model identifies when it requires additional information or computational power during reasoning. It can generate specific queries that trigger the use of external agents seamlessly.

After synthesizing all the information and structured insights, the model crafts a coherent reasoning chain and generates a final answer.

Let’s start coding

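Let us now go step by step through how to build a powerful reasoning app. First, we install the libraries the project depends on with pip. Judging by the imports used below, requirements.txt should list at least langgraph, typing_extensions, tavily-python, python-dotenv, langchain-anthropic, and nano-graphrag.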

pip install -r requirements.txt

The next step is the usual one where we will import the relevant libraries, the significance of which will become evident as we proceed.

Nano-GraphRAG is a concise, easy-to-customize GraphRAG implementation.

LangGraph is like “writing another language program in Python”:

State: like a variable table shared by every step

Node: a function that does the work

Edge: control flow between nodes

import json
import os

from dotenv import load_dotenv
from langchain_anthropic import ChatAnthropic
from langgraph.graph import END, StateGraph
from nano_graphrag import GraphRAG, QueryParam
from tavily import TavilyClient
from typing_extensions import TypedDict
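The snippets that follow rely on a few names the article never defines: a GraphState type, an llm object, a Prompts class, and a set of BEGIN_*/END_* tag constants. The sketch below shows one plausible shape for the state and two of the tag pairs, inferred from the keys and names the later code reads and writes. The tag strings are assumptions, not the author's values, and extract_between is a hypothetical helper added for illustration. The llm object is presumably a ChatAnthropic instance; it needs an API key, so it is left out here.

```python
from typing import Any, Dict, List, Optional, TypedDict


class GraphState(TypedDict, total=False):
    """Keys read or written by the agent functions in this article."""
    question: str
    reasoning: str
    needed_agents: List[str]
    current_agent: str
    search_query: str
    code_query: str
    mind_map_query: str
    search_result: str
    code_result: str
    mind_map_result: str
    mind_map_knowledge: Dict[str, Any]
    final_answer: str


# Assumed tag strings; the article does not show the real values.
BEGIN_SEARCH_QUERY = "<|begin_search_query|>"
END_SEARCH_QUERY = "<|end_search_query|>"
BEGIN_CODE_QUERY = "<|begin_code_query|>"
END_CODE_QUERY = "<|end_code_query|>"


def extract_between(text: str, begin: str, end: str) -> Optional[str]:
    """Return the text between the first begin/end pair, or None if absent."""
    if begin not in text:
        return None
    after_begin = text.split(begin, 1)[1]
    return after_begin.split(end, 1)[0].strip()
```

The remaining tag pairs (mind map queries and the *_RESULT tags) would follow the same pattern.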

I developed the MindMap class as a smart system for storing and retrieving information efficiently. When I initialize it using the __init__ function, I create a working directory (local_mem) and use GraphRAG to organize data, immediately saving any initial content provided.

I use the insert function to add information and store it, confirming how many characters were saved. When I need to ask questions, I call the __call__ function, which searches stored information using the graph_retrieval function, looking through related data in "local" mode and displaying the results.

I also developed the process_community_report function to read and combine structured community reports from a special JSON file, making it easier to see all relevant information simultaneously. If I want to search specifically within community reports, I use the graph_query function, which gathers and formats my question based on those reports, returning the most relevant information.

class MindMap:
    def __init__(self, ini_content="", working_dir="./local_mem"):
        """
        Initialize the graph with a specified working directory.
        """
        self.working_dir = working_dir
        self.graph_func = GraphRAG(working_dir=self.working_dir)

        # Insert the initial content only when some was provided, so an
        # empty mind map is never indexed at startup
        content = ini_content
        if content.strip():
            self.graph_func.insert(content)

    def process_community_report(self, json_path="local_mem/kv_store_community_reports.json") -> str:
        """
        Read and process the community report JSON, returning the combined report string.
        """
        try:
            # Read JSON file
            with open(json_path, 'r') as f:
                data = json.load(f)

            # Collect all report strings from each community
            all_reports = []
            for community_id, community in data.items():
                report_string = community.get("report_string", "")
                all_reports.append(f"Snippet {community_id}:\n{report_string}\n")

            # Combine all reports
            combined_reports = "\n".join(all_reports)
            return combined_reports
        except Exception as e:
            print(f"Error processing community report: {e}")
            return "No community reports found."

    def graph_retrieval(self, query: str) -> str:
        """
        Query the graph with the given query.
        """
        # Perform a local graphrag search and return the result
        print("\nLocal graph search result:")
        try:
            res = self.graph_func.query(query, param=QueryParam(mode="local"))
            print(res)
            return res
        except Exception as e:
            print(f"Error during graph retrieval: {e}")
            return f"Error querying the mind map: {str(e)}"

    def graph_query(self, query: str) -> str:
        """
        Retrieve community reports by processing the local JSON store.
        """
        try:
            combined_report = self.process_community_report()
            print("\ncombined community report is:", combined_report)
            # NOTE: this prompt is built but never sent to a model; as written,
            # the method returns the combined report text itself
            query = f"Answer the question:{query}\n\n based on the information:\n\n{combined_report}"
            return combined_report
        except Exception as e:
            print(f"Error during graph query: {e}")
            return f"Error processing the community report: {str(e)}"

    def insert(self, text):
        """
        Insert text into the graph.
        """
        try:
            self.graph_func.insert(text)
            print(f"Inserted {len(text)} characters into the mind map.")
        except Exception as e:
            print(f"Error inserting into mind map: {e}")

    def __call__(self, query):
        """
        Query the mind map knowledge graph and return the result.
        """
        return self.graph_retrieval(query)

I created the MultiAgentReasoner class to function like a smart manager that coordinates multiple agents to help answer your question, where each agent has a specific role, such as searching the web, writing code, or organizing information.

When I create a new MultiAgentReasoner, it connects to a tool called Tavily to assist with searches, creates a MindMap to store and organize information, sets a limit on how many searches it can perform (by default, it’s 3), creates a plan (called a workflow), and prepares a place to store collected knowledge.

The initial_reasoning function is where the system begins analyzing your question. It takes the question from the GraphState and uses a language model (LLM) to break it down. If the LLM identifies a “thinking” part, it extracts that section. The system then determines which helpers (agents) are needed based on the question, adding the search agent if needed, the code agent for coding-related questions, or the mind map agent if it relates to organization.

If no agent is added for coding-related questions, it automatically adds the code agent and generates a default query like “Write code to [your question].” After storing the question and its initial reasoning in the MindMap for future reference, it previews its reasoning and the agents it will use.

If something goes wrong, the system prints an error message, provides a fallback response like “Let me analyze this step by step,” sets the code agent as the default helper, and continues by returning basic information about the question.

class MultiAgentReasoner:
    def __init__(self, max_search_limit=3):
        self.tavily_client = TavilyClient(api_key=os.getenv("TAVILY_API_KEY"))
        self.mind_map = MindMap()
        self.max_search_limit = max_search_limit
        self.workflow = self.create_workflow()
        self.collected_knowledge = {}

    def initial_reasoning(self, state: GraphState):
        """Initial reasoning step to determine what agents are needed"""
        print("\n=== STEP 1: INITIAL REASONING ===")
        question = state["question"]

        try:
            # Invoke the LLM with the identify agents prompt
            llm_output = llm.invoke(Prompts.IDENTIFY_AGENTS.format(question=question))

            # Get the content from the LLM output
            reasoning = llm_output.content

            # Extract the thinking part if present
            if "<think>" in reasoning and "</think>" in reasoning:
                reasoning = reasoning.split("<think>")[1].split("</think>")[0].strip()

            # Determine which agents are needed
            needed_agents = []
            if BEGIN_SEARCH_QUERY in reasoning:
                needed_agents.append("search")
            if BEGIN_CODE_QUERY in reasoning:
                needed_agents.append("code")
            if BEGIN_MIND_MAP_QUERY in reasoning:
                needed_agents.append("mind_map")

            # If we have a code-related question but no agents identified, default to code agent
            if ("code" in question.lower() or "program" in question.lower() or
                    "function" in question.lower() or "algorithm" in question.lower()) and not needed_agents:
                needed_agents.append("code")
                # Add a default code query
                code_query = f"Write code to {question}"
                reasoning += f"\n\n{BEGIN_CODE_QUERY}{code_query}{END_CODE_QUERY}"

            # Insert initial reasoning into mind map
            knowledge_text = f"Question: {question}\n\nInitial Reasoning:\n{reasoning}"
            self.mind_map.insert(knowledge_text)
            print(f"Mind Map: Inserted initial reasoning ({len(knowledge_text)} chars)")

            print(f"Initial reasoning: {reasoning[:100]}...")
            print(f"Needed agents: {needed_agents}")

            self.collected_knowledge["initial_reasoning"] = {
                "question": question,
                "reasoning": reasoning
            }

            return {
                "reasoning": reasoning,
                "needed_agents": needed_agents,
            }
        except Exception as e:
            print(f"Error in initial reasoning: {e}")
            # Provide a fallback response
            return {
                "reasoning": f"Let me analyze this step by step. This question is about {question}",
                "needed_agents": ["code"],  # Default to code agent for error recovery
                "mind_map_knowledge": {"initial_reasoning": {"question": question}}
            }
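The agent-selection logic in initial_reasoning can be read in isolation as a small pure function. The sketch below restates it without the LLM or the mind map, so the keyword fallback is easier to follow. The tag strings are assumed values (the article does not show them), and detect_needed_agents is a hypothetical name.

```python
from typing import Dict, List, Tuple

# Assumed tag strings; the real constants are not shown in the article.
BEGIN_TAGS: Dict[str, str] = {
    "search": "<|begin_search_query|>",
    "code": "<|begin_code_query|>",
    "mind_map": "<|begin_mind_map_query|>",
}
END_CODE_QUERY = "<|end_code_query|>"
CODE_KEYWORDS = ("code", "program", "function", "algorithm")


def detect_needed_agents(question: str, reasoning: str) -> Tuple[List[str], str]:
    """Return (needed_agents, reasoning), adding a default code query if needed."""
    # An agent is needed when its begin tag appears in the reasoning text.
    needed = [agent for agent, tag in BEGIN_TAGS.items() if tag in reasoning]

    # Fallback: a code-like question with no tagged query gets the code agent.
    if not needed and any(word in question.lower() for word in CODE_KEYWORDS):
        needed.append("code")
        code_query = f"Write code to {question}"
        reasoning += f"\n\n{BEGIN_TAGS['code']}{code_query}{END_CODE_QUERY}"
    return needed, reasoning
```

Note that the fallback is a plain substring check, so any question containing the word “function” or “program” is routed to the code agent, even when it is not really about coding.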

Let’s create the route_to_agent function to decide which agent should handle a question based on its reasoning. When the system receives a question, I check its reasoning, stored in the state, to determine if it requires a search, code, or mind map.

If no specific agent is needed, I default to the synthesize step. If a search agent is required, I look for a search query in the reasoning, extract it, and route the question to the search agent while saving the decision in the MindMap. If a code agent is needed, I check for a code-related query, extract the relevant information, and send it to the code agent, also saving the decision in the MindMap.

If the reasoning involves a mind map query, I extract that and route the question to the mind map agent. If none of these specific queries are found, I route the question to the synthesize step, which combines the collected information into a response.

    def route_to_agent(self, state: GraphState):
        """Determine which agent to route to next"""
        reasoning = state["reasoning"]
        needed_agents = state["needed_agents"]

        if not needed_agents:
            return {"current_agent": "synthesize"}

        # Check for specific agent queries in the reasoning
        if "search" in needed_agents and BEGIN_SEARCH_QUERY in reasoning:
            search_query = reasoning.split(BEGIN_SEARCH_QUERY)[1].split(END_SEARCH_QUERY)[0].strip()

            # Add to mind map knowledge
            mind_map_knowledge = state.get("mind_map_knowledge", {})
            mind_map_knowledge["routing"] = {
                "agent_selected": "search",
                "search_query": search_query
            }

            # Insert this routing decision into mind map
            knowledge_text = f"Routing Decision: Selected search agent with query: {search_query}"
            self.mind_map.insert(knowledge_text)
            print(f"Mind Map: Inserted routing decision ({len(knowledge_text)} chars)")

            return {
                "current_agent": "search",
                "search_query": search_query,
                "mind_map_knowledge": mind_map_knowledge
            }
        elif "code" in needed_agents and BEGIN_CODE_QUERY in reasoning:
            code_query = reasoning.split(BEGIN_CODE_QUERY)[1].split(END_CODE_QUERY)[0].strip()

            # Add to mind map knowledge
            mind_map_knowledge = state.get("mind_map_knowledge", {})
            mind_map_knowledge["routing"] = {
                "agent_selected": "code",
                "code_query": code_query
            }

            # Insert this routing decision into mind map
            knowledge_text = f"Routing Decision: Selected code agent with query: {code_query}"
            self.mind_map.insert(knowledge_text)
            print(f"Mind Map: Inserted routing decision ({len(knowledge_text)} chars)")

            return {
                "current_agent": "code",
                "code_query": code_query,
                "mind_map_knowledge": mind_map_knowledge
            }
        elif "mind_map" in needed_agents and BEGIN_MIND_MAP_QUERY in reasoning:
            mind_map_query = reasoning.split(BEGIN_MIND_MAP_QUERY)[1].split(END_MIND_MAP_QUERY)[0].strip()

            # Add to mind map knowledge
            mind_map_knowledge = state.get("mind_map_knowledge", {})
            mind_map_knowledge["routing"] = {
                "agent_selected": "mind_map",
                "mind_map_query": mind_map_query
            }

            # Insert this routing decision into mind map
            knowledge_text = f"Routing Decision: Selected mind map agent with query: {mind_map_query}"
            self.mind_map.insert(knowledge_text)
            print(f"Mind Map: Inserted routing decision ({len(knowledge_text)} chars)")

            return {
                "current_agent": "mind_map",
                "mind_map_query": mind_map_query,
                "mind_map_knowledge": mind_map_knowledge
            }

        # Default to synthesize if no specific queries found
        # Add to mind map knowledge
        mind_map_knowledge = state.get("mind_map_knowledge", {})
        mind_map_knowledge["routing"] = {
            "agent_selected": "synthesize",
            "reason": "No specific agent queries found"
        }

        # Insert this default routing into mind map
        knowledge_text = "Routing Decision: Defaulted to synthesize as no specific agent queries were found"
        self.mind_map.insert(knowledge_text)
        print(f"Mind Map: Inserted default routing decision ({len(knowledge_text)} chars)")

        return {
            "current_agent": "synthesize",
            "mind_map_knowledge": mind_map_knowledge
        }
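The three routing branches above differ only in the agent name and its tag pair, so the same decision can be expressed with a lookup table. This is a minimal sketch of that idea, not the author's implementation: it leaves out the mind map bookkeeping, uses assumed tag strings, and the names AGENT_TAGS and route are hypothetical.

```python
from typing import Dict, List, Tuple

# Assumed (begin, end) tag pairs per agent, in the same priority order
# as the if/elif chain: search first, then code, then mind map.
AGENT_TAGS: Dict[str, Tuple[str, str]] = {
    "search": ("<|begin_search_query|>", "<|end_search_query|>"),
    "code": ("<|begin_code_query|>", "<|end_code_query|>"),
    "mind_map": ("<|begin_mind_map_query|>", "<|end_mind_map_query|>"),
}


def route(reasoning: str, needed_agents: List[str]) -> Dict[str, str]:
    """Pick the first needed agent whose query tag appears in the reasoning."""
    for agent, (begin, end) in AGENT_TAGS.items():
        if agent in needed_agents and begin in reasoning:
            query = reasoning.split(begin, 1)[1].split(end, 1)[0].strip()
            # Produces the same state keys as the original branches:
            # search_query, code_query, or mind_map_query.
            return {"current_agent": agent, f"{agent}_query": query}
    # No tagged query for any needed agent: fall through to synthesis.
    return {"current_agent": "synthesize"}
```

With a table like this, adding a fourth agent would mean adding one entry rather than another copy of the branch.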

Then, I designed the web_search_agent function to search for and gather relevant information when a question requires it. It prints a message and retrieves the search query from the system’s state. Using a tool called Tavily, it searches the web for the query, collects up to three search results, and combines their content.

The system then creates instructions for the language model (LLM) to process the results, helping focus on the most relevant information. It checks for a section labeled “Final Information” and uses that part if available, or else uses the entire LLM output.

The results and query are stored in the Mind Map for later reference. It updates the to-do list by removing “search” and updates the reasoning by replacing the query with the search results.

Finally, the function stores the search results in the collected knowledge and returns the updated reasoning and results, ensuring the system has the most up-to-date information to answer the question.

    def web_search_agent(self, state: GraphState):
        """Execute web search and process results"""
        print("\n=== EXECUTING WEB SEARCH AGENT ===")
        search_query = state["search_query"]
        print(f"Searching for: {search_query}")

        # Execute search using Tavily
        search_results = self.tavily_client.search(search_query, max_results=3)
        retrieved_content = "\n".join([r["content"] for r in search_results["results"]])

        # Process the search results to extract relevant information
        prev_reasoning = state["reasoning"]
        process_prompt = get_webpage_to_reasonchain_instruction(prev_reasoning, search_query, retrieved_content)

        # Invoke the LLM with the process prompt
        processed_output = llm.invoke(process_prompt).content

        # Extract the final information
        if "**Final Information**" in processed_output:
            # Keep only the text after the marker (the source line was cut
            # off here, so this split is a reconstruction from the prose)
            search_result = processed_output.split("**Final Information**")[-1].strip()
        else:
            search_result = processed_output

        # Add to mind map knowledge
        mind_map_knowledge = state.get("mind_map_knowledge", {})
        mind_map_knowledge["web_search"] = {
            "query": search_query,
            "result": search_result
        }

        # Insert web search results into mind map
        knowledge_text = f"""
        Web Search Agent:
        Query: {search_query}
        Retrieved Information:
        {search_result}
        """
        self.mind_map.insert(knowledge_text)
        print(f"Mind Map: Inserted web search results ({len(knowledge_text)} chars)")

        # Remove the search agent from needed_agents
        needed_agents = state["needed_agents"].copy()
        if "search" in needed_agents:
            needed_agents.remove("search")

        # Combine previous reasoning with search results
        updated_reasoning = prev_reasoning.replace(
            f"{BEGIN_SEARCH_QUERY}{search_query}{END_SEARCH_QUERY}",
            f"{BEGIN_SEARCH_RESULT}{search_result}{END_SEARCH_RESULT}"
        )

        print("Search results processed. Updating reasoning...")

        self.collected_knowledge["web_search"] = {
            "query": search_query,
            "result": search_result
        }

        return {
            "reasoning": updated_reasoning,
            "search_result": search_result,
            "needed_agents": needed_agents
        }
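Two string-handling steps in this agent are worth isolating: pulling out the “Final Information” section, and swapping the query tag for a result tag. One thing to watch is that route_to_agent strips whitespace from the query, so an exact str.replace on the tagged query only matches when the model wrote no spaces inside the tags. The sketch below shows a whitespace-tolerant version; both function names and the short tags in the usage are hypothetical.

```python
import re

FINAL_INFO_MARKER = "**Final Information**"


def extract_final_information(processed_output: str) -> str:
    """Return the text after the marker, or the whole output if it is absent."""
    if FINAL_INFO_MARKER in processed_output:
        return processed_output.split(FINAL_INFO_MARKER)[-1].strip()
    return processed_output.strip()


def splice_result(reasoning: str, query: str, result: str,
                  begin_q: str, end_q: str, begin_r: str, end_r: str) -> str:
    """Replace the first tagged query with a tagged result.

    Whitespace between the tags and the query text is tolerated.
    """
    pattern = (re.escape(begin_q) + r"\s*" + re.escape(query)
               + r"\s*" + re.escape(end_q))
    # A function replacement avoids backslash handling in the result text.
    return re.sub(pattern, lambda _match: f"{begin_r}{result}{end_r}",
                  reasoning, count=1)
```

For example, splice_result("Need data. <q> japan population </q> Then answer.", "japan population", "RESULT", "<q>", "</q>", "<r>", "</r>") replaces the tagged query even though it is padded with spaces.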

After that, I developed the code_execution_agent function to generate and execute the code.

It creates a “chain of thought” (cot) to guide the language model in generating the code, then sends the instructions to the model. If the output contains special tags like [ANSWER] and [/ANSWER], it extracts the code between them; otherwise, it uses the entire response.

The function saves both the original query and the generated code in the Mind Map for future reference, updates the to-do list, removes the “code” task, and updates the overall understanding by replacing the code query with the result.

It also stores the query and result in the collected knowledge for easy future use and returns the updated reasoning, code result, and the new list of needed agents, completing the process.

    def code_execution_agent(self, state: GraphState):
        """Execute code and return results"""
        print("\n=== EXECUTING CODE AGENT ===")
        code_query = state["code_query"]
        print(f"Code query: {code_query}")

        # Prepare the code for execution
        code_execution_prompt = make_cot_output_prompt((code_query, ""))

        # Invoke the LLM with the code execution prompt
        execution_output = llm.invoke(code_execution_prompt).content

        # Extract the code result
        if "[ANSWER]" in execution_output:
            code_result = execution_output.split("[ANSWER]")[1].split("[/ANSWER]")[0].strip()
        else:
            code_result = execution_output

        # Add to mind map knowledge
        mind_map_knowledge = state.get("mind_map_knowledge", {})
        mind_map_knowledge["code_execution"] = {
            "query": code_query,
            "result": code_result
        }

        # Insert code execution results into mind map
        knowledge_text = f"""
        Code Execution Agent:
        Query: {code_query}
        Generated Code:
        {code_result}
        """
        self.mind_map.insert(knowledge_text)
        print(f"Mind Map: Inserted code execution results ({len(knowledge_text)} chars)")

        # Remove the code agent from needed_agents
        needed_agents = state["needed_agents"].copy()
        if "code" in needed_agents:
            needed_agents.remove("code")

        # Combine previous reasoning with code results
        updated_reasoning = state["reasoning"].replace(
            f"{BEGIN_CODE_QUERY}{code_query}{END_CODE_QUERY}",
            f"{BEGIN_CODE_RESULT}{code_result}{END_CODE_RESULT}"
        )

        print("Code executed. Updating reasoning...")

        self.collected_knowledge["code_execution"] = {
            "query": code_query,
            "result": code_result
        }

        return {
            "reasoning": updated_reasoning,
            "code_result": code_result,
            "needed_agents": needed_agents
        }
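As shown, the code agent takes its result from the LLM's response; nothing in the snippet passes the generated code to an interpreter. If you want the output of a real run, one option is to execute the extracted code in a separate Python process with a timeout. The sketch below assumes the generated code is a standalone Python script; run_python_snippet is a hypothetical helper, and a subprocess is not a sandbox, so this is only suitable for code you are willing to run on your machine.

```python
import subprocess
import sys
from typing import Tuple


def run_python_snippet(code: str, timeout_s: float = 10.0) -> Tuple[bool, str]:
    """Run code in a separate Python process; return (succeeded, output)."""
    try:
        completed = subprocess.run(
            [sys.executable, "-c", code],  # same interpreter as the caller
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return False, f"Timed out after {timeout_s} seconds"

    if completed.returncode != 0:
        # On failure, return the traceback so it can be fed back to the model.
        return False, completed.stderr.strip()
    return True, completed.stdout.strip()
```

The (succeeded, output) pair could then be stored as code_result in place of, or alongside, the model's own answer.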

I developed the mind_map_agent function to help organize and connect different pieces of information. First, it prints a message announcing that the mind map agent is running and retrieves the mind map query from the state.

It will collect all the information, including the original question, reasoning, search results, code execution results, and any knowledge already in the mind map. It then creates a comprehensive knowledge document with this information and stores it in the mind map.

When querying the mind map with the user’s question, it attempts to find a direct answer, but if no result is found, I create a structured analysis using the language model to highlight key concepts and insights.

If there’s an error querying the mind map, I provide a backup message to continue the process. Then, I save the mind map query and its result in the mind map for future use and update the to-do list by marking the mind map task as completed.

Finally, I update the overall reasoning by incorporating the mind map results and return the updated reasoning, mind map results, and knowledge.

    def mind_map_agent(self, state: GraphState):
        """Query the mind map and return results"""
        print("\n=== EXECUTING MIND MAP AGENT ===")
        mind_map_query = state["mind_map_query"]
        print(f"Mind map query: {mind_map_query}")

        # First, collect all knowledge gathered so far
        question = state.get("question", "")
        reasoning = state.get("reasoning", "")
        search_result = state.get("search_result", "")
        code_result = state.get("code_result", "")
        mind_map_knowledge = state.get("mind_map_knowledge", {})

        # Construct a comprehensive knowledge document
        knowledge_document = f"""
        Question: {question}
        Reasoning Chain:
        {reasoning}
        """

        if search_result:
            knowledge_document += f"""
        Web Search Results:
        {search_result}
        """

        if code_result:
            knowledge_document += f"""
        Code Execution Results:
        {code_result}
        """

        # Insert the comprehensive knowledge into the mind map
        self.mind_map.insert(knowledge_document)
        print(f"Mind Map: Inserted comprehensive knowledge document ({len(knowledge_document)} chars)")

        # Now query the mind map
        try:
            mind_map_result = self.mind_map(mind_map_query)
            if not mind_map_result or mind_map_result.strip() == "":
                # If no result, generate a structured analysis
                structured_analysis_prompt = f"""
                Based on this information:
                {knowledge_document}
                Provide a structured analysis for: "{mind_map_query}"
                Highlight key concepts, relationships, and insights.
                """
                mind_map_result = llm.invoke(structured_analysis_prompt).content
        except Exception as e:
            print(f"Error querying mind map: {e}")
            mind_map_result = f"I've organized the available information about '{mind_map_query}' in my knowledge structure, but encountered a technical issue retrieving it. I'll continue with what we've learned so far."

        # Add the mind map query result to the knowledge collection
        mind_map_knowledge["mind_map_query"] = {
            "query": mind_map_query,
            "result": mind_map_result
        }

        # Insert the mind map query results back into the mind map
        knowledge_text = f"""
        Mind Map Agent:
        Query: {mind_map_query}
        Structured Knowledge:
        {mind_map_result}
        """
        self.mind_map.insert(knowledge_text)
        print(f"Mind Map: Inserted query results ({len(knowledge_text)} chars)")

        # Remove the mind map agent from needed_agents
        needed_agents = state["needed_agents"].copy()
        if "mind_map" in needed_agents:
            needed_agents.remove("mind_map")

        # Combine previous reasoning with mind map results
        updated_reasoning = state["reasoning"].replace(
            f"{BEGIN_MIND_MAP_QUERY}{mind_map_query}{END_MIND_MAP_QUERY}",
            f"{BEGIN_MIND_MAP_RESULT}{mind_map_result}{END_MIND_MAP_RESULT}"
        )

        print("Mind map queried. Updating reasoning...")

        return {
            "reasoning": updated_reasoning,
            "mind_map_result": mind_map_result,
            "needed_agents": needed_agents,
            "mind_map_knowledge": mind_map_knowledge
        }

So, I developed the synthesize_answer function to create a final, comprehensive answer based on all the information collected. First, I display a message to show that the process is starting and retrieve the user’s question and all the reasoning done so far.

I then combine all this information into a document that includes the original question and reasoning chain. If there are any search results or code execution results, I add them to the document to provide a complete view of the collected knowledge.

Once the knowledge is gathered, I store it in the mind map for future use and query it for any insights related to the question. I then generate a synthesis prompt that includes all the gathered knowledge, including insights from the mind map, and pass it to the language model to create a clear and complete answer.

After generating the final answer, I store it in the mind map and print a message indicating that the answer is ready. Finally, I return the final answer to be shown to the user.

    def synthesize_answer(self, state: GraphState):
        """Synthesize the final answer after storing collected knowledge"""
        print("\n=== STEP FINAL: SYNTHESIZE ANSWER ===")
        question = state["question"]
        reasoning = state["reasoning"]

        # Now store all collected knowledge in the mind map
        combined_knowledge = f"""
        Question: {question}
        Reasoning Chain:
        {reasoning}
        """

        # Add search results if available
        if "web_search" in self.collected_knowledge:
            combined_knowledge += f"""
        Web Search Results:
        {self.collected_knowledge['web_search']['result']}
        """

        # Add code results if available
        if "code_execution" in self.collected_knowledge:
            combined_knowledge += f"""
        Code Execution Results:
        {self.collected_knowledge['code_execution']['result']}
        """

        # Store the combined knowledge in the mind map
        print("Storing collected knowledge in mind map...")
        self.mind_map.insert(combined_knowledge)

        # Now query the mind map for insights
        mind_map_insights = self.mind_map(question)

        # Generate the final answer using all available information
        synthesis_prompt = f"""
        Question: {question}
        Reasoning process:
        {reasoning}
        Mind map insights:
        {mind_map_insights}
        Based on the above information, provide a clear and comprehensive answer.
        """

        final_answer = llm.invoke(synthesis_prompt).content

        # Store the final answer in the mind map for future reference
        self.mind_map.insert(f"Final Answer: {final_answer}")

        print("Final answer generated using knowledge from all agents.")

        return {"final_answer": final_answer}
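The document-building part of synthesize_answer can be pulled out into a pure function, which makes it easy to check what actually gets written to the mind map. This is a minimal sketch under the assumption that collected_knowledge has the shape used in the agents above (a dict of entries with a "result" key); build_combined_knowledge and SECTION_TITLES are hypothetical names.

```python
from typing import Any, Dict

# Section headings for each kind of collected result, in output order.
SECTION_TITLES: Dict[str, str] = {
    "web_search": "Web Search Results",
    "code_execution": "Code Execution Results",
}


def build_combined_knowledge(question: str, reasoning: str,
                             collected: Dict[str, Dict[str, Any]]) -> str:
    """Assemble the knowledge document from whatever the agents collected."""
    parts = [f"Question: {question}", f"Reasoning Chain:\n{reasoning}"]
    for key, title in SECTION_TITLES.items():
        entry = collected.get(key)
        # Skip agents that never ran or produced an empty result.
        if entry and entry.get("result"):
            parts.append(f"{title}:\n{entry['result']}")
    return "\n\n".join(parts)
```

Because the function only reads its arguments, it can be tested without an LLM, a Tavily key, or a GraphRAG working directory.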

I created the decide_next_step function to act as a traffic director, determining the next step in the workflow based on the current agent. It checks which agent is currently selected and returns the matching node name. If the current agent is “synthesize”, or no needed agents remain, it moves on to synthesis; in any other case it sends the flow back to route_to_agent.

I also developed the create_workflow function to build the entire workflow graph. I add the key nodes for reasoning, routing, web search, code execution, mind map, and synthesis, set the entry point to “initial_reasoning”, and define the edges connecting these nodes.

I create conditional edges that rely on the decide_next_step function, which decides the next node based on the specific needs of the question. Finally, I mark the end of the process, compile the graph, and return the completed workflow.

    @staticmethod
    def decide_next_step(state: GraphState):
        """Decide the next step in the workflow"""
        current_agent = state.get("current_agent", "")

        if current_agent == "search":
            return "web_search_agent"
        elif current_agent == "code":
            return "code_execution_agent"
        elif current_agent == "mind_map":
            return "mind_map_agent"
        elif current_agent == "synthesize" or not state["needed_agents"]:
            return "synthesize"
        else:
            return "route_to_agent"

    def create_workflow(self):
        """Create the workflow graph"""
        workflow = StateGraph(GraphState)

        # Add nodes
        workflow.add_node("initial_reasoning", self.initial_reasoning)
        workflow.add_node("route_to_agent", self.route_to_agent)
        workflow.add_node("web_search_agent", self.web_search_agent)
        workflow.add_node("code_execution_agent", self.code_execution_agent)
        workflow.add_node("mind_map_agent", self.mind_map_agent)
        workflow.add_node("synthesize", self.synthesize_answer)

        # Set entry point
        workflow.set_entry_point("initial_reasoning")

        # Define edges
        workflow.add_edge("initial_reasoning", "route_to_agent")
        workflow.add_edge("web_search_agent", "route_to_agent")
        workflow.add_edge("code_execution_agent", "route_to_agent")
        workflow.add_edge("mind_map_agent", "route_to_agent")

        # Define conditional edges
        workflow.add_conditional_edges(
            "route_to_agent",
            self.decide_next_step,
            {
                "web_search_agent": "web_search_agent",
                "code_execution_agent": "code_execution_agent",
                "mind_map_agent": "mind_map_agent",
                "synthesize": "synthesize",
                "route_to_agent": "route_to_agent"
            }
        )

        # Add edge to END
        workflow.add_edge("synthesize", END)

        # Compile the workflow
        compiled_graph = workflow.compile()
        return compiled_graph
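To see how the graph loops, it helps to trace the node order without LangGraph, an LLM, or any API keys. The sketch below is a toy simulation, not the compiled workflow: routing is simplified to “take the first remaining agent” (the real route_to_agent also checks for query tags), and each agent's only effect is to remove itself from needed_agents. The names simulate and NODE_FOR_AGENT are hypothetical.

```python
from typing import Dict, List

# Maps the agent chosen by routing to the node that runs it.
NODE_FOR_AGENT: Dict[str, str] = {
    "search": "web_search_agent",
    "code": "code_execution_agent",
    "mind_map": "mind_map_agent",
}


def decide_next_step(current_agent: str) -> str:
    """Simplified conditional edge: unknown or 'synthesize' ends the loop."""
    return NODE_FOR_AGENT.get(current_agent, "synthesize")


def simulate(needed_agents: List[str]) -> List[str]:
    """Return the sequence of nodes visited for a given list of agents."""
    remaining = list(needed_agents)
    visited = ["initial_reasoning"]
    while True:
        visited.append("route_to_agent")
        # Simplified routing: pick the first agent still on the list.
        current_agent = remaining[0] if remaining else "synthesize"
        next_node = decide_next_step(current_agent)
        visited.append(next_node)
        if next_node == "synthesize":
            return visited
        # Each agent removes itself before control returns to the router.
        remaining.remove(current_agent)


if __name__ == "__main__":
    print(simulate(["search", "code"]))
```

The trace makes the design visible: every agent node hands control back to route_to_agent, and the loop only ends once the needed_agents list has been drained and the router selects synthesize.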

Conclusion

Agentic Reasoning and Nano-GraphRAG are not just tool frameworks. They represent the evolution of AI systems from single models to intelligent agent ecosystems, and from static knowledge to dynamic cognition, marking the beginning of a reasoning revolution. Nano-GraphRAG is ideal for those interested in GraphRAG: it is simple, easy to use, and customizable, which makes it a strong alternative for developers.

If this article might be helpful to your friends, please forward it to them.
