Hybrid RAG + Fine-Tuning Your Dataset Python Project: Easy AI/Chat for your Docs

fine-tuning refers to further adjusting based on the already trained model so that your model’s output can better meet your expectations. We do not need to retrain a new model through fine-tuning,

When we find that AI cannot answer our questions correctly, the way we thought of earlier is to use retrieval to make the retrieved content a reference for AI so that the content of its reply can be what we want. This is RAG. In addition to RAG, there is another method called model fine-tuning.

Fine-tuning is a technique for teaching a trained model new tasks with little effort, where an already-trained model is adjusted to fit new data.

Let’s say you want to create a model that answers exam questions about science. However, it isn’t easy to collect many exam questions. Suppose you already have a model that has learned a lot of general questions and answers. This model can answer general exam questions but cannot answer questions for your specific subject or focus area.

That’s where fine-tuning comes in. You collect a small set of questions and answers related to your specific subject or focus area, then add that data to the trained model and retrain it. The model will adjust to the new data and become capable of answering exam questions tailored to your subject.

The advantage of fine-tuning is that it requires less new data. If you already have a trained model, you can use its knowledge to adapt to new tasks. The challenge with fine-tuning lies in deciding how much to adjust. Adjusting too much will cause you to lose original knowledge; adjusting too little may cause you to adapt better to new tasks.

In this story, we’ll introduce fine-tuning and provide a clear overview of supervised fine-tuning, LoRA, and QLoRA techniques. Then, we will guide you step-by-step on how to customize your dataset to fine-tune the Llama 3.1 8B model on Google Colab. After fine-tuning, we’ll show you how to evaluate the model’s performance to ensure it’s ready for use. Finally, we’ll walk you through adding HybridRAG to your fine-tuning process to achieve even better results

Before we start! 🦸🏻♀️

If you like this topic and you want to support me:

like my article; that will really help me out.👏

Follow me on my YouTube channel

Subscribe to me to get the latest article.

What is Fine-Tuning :

In machine learning, fine-tuning refers to further adjusting based on the already trained model so that your model’s output can better meet your expectations. We do not need to retrain a new model through fine-tuning, which saves us the high cost of training a new model.

Why Fine-tuning The Model?

When the data set is minimal (several thousand images), training an extensive neural network with tens of millions of parameters from scratch is unrealistic because the more significant the model, the more data it requires, and overfitting is inevitable. At this time, if you still want to use the super feature extraction capabilities of an extensive neural network, you can only rely on fine-tuning the already trained model.

It can reduce training costs: If you export feature vectors for transfer learning, the subsequent training costs will be very low. There will be no pressure to use the CPU, and it can be done without a deep learning machine.

The model's predecessors have spent a lot of effort to train and are likely more potent than those you build from scratch, so there is no need to reinvent the wheel.

What is Supervised Fine-Tuning (SFT)

Instead, we can build on existing knowledge through supervised fine-tuning, specializing in large language models (LLMs) for particular tasks or domains using labeled data sets. This approach teaches models specific tasks, resulting in greater precision and performance for targeted applications. In supervised fine-tuning, the model is trained on a labeled dataset, where both the input examples and their corresponding correct outputs are presented.

The following figure shows an example of a typical instruction, including a system prompt for guiding the model, a user prompt for providing a task, and the expected output the model generates. High-quality open-source instruction datasets are in the 💾 LLM Datasets GitHub repository.

But SFT also has limitations. It works best when it leverages the base model’s knowledge, and learning utterly new information (such as an unknown language) is more complex and can easily lead to hallucinations. If it is a new domain that the base model still needs to be aware of, continuous pre-training on the original dataset should be performed first.

Additionally, an already fine-tuned instruction model is often close to your needs. For example, a model might perform well in performance but indicate that OpenAI or Meta, not you trained it. In this case, you can adjust its behavior through preference alignment. By providing 100–1000 instruction examples for selection and rejection, you can make the LLM claim to be trained by you, not OpenAI.

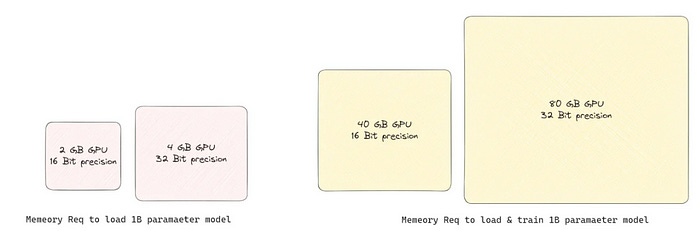

As of this year, some progress has been made, resulting in larger models. However, tuning such a huge open-source model on standard systems is only feasible with specialized optimization techniques.

Full Fine-Tuning

Full fine-tuning is the most direct SFT technique. It usually brings the best results by retraining all parameters of the pre-trained model on the instruction dataset. Still, it requires a lot of computing resources (fine-tuning an 8B model requires multiple high-end GPUs). Since the entire model is modified, full fine-tuning can easily cause the model to forget previous skills and knowledge.

Fine-tuning these LLMs on downstream datasets can achieve huge performance improvements.

However, full fine-tuning becomes infeasible on consumer hardware as models get bigger. Furthermore, storing and deploying fine-tuned models independently for each downstream task is very expensive since the fine-tuned model is the same size as the original pre-trained model. The Parameter-Efficient Fine-Tuning (PEFT) method aims to solve both problems!

Parameter Efficient Fine-tuning (PEFT)

PEFT Finetuning is parameter-efficient fine-tuning, a set of fine-tuning techniques that allow you to fine-tune and train your model more efficiently than normal training.

PEFT techniques usually reduce the number of trainable parameters in a neural network. The most famous and commonly used PEFT techniques are Prefix Tuning, P-tuning, LoRA, etc. Lora is the most widely used one. There are many variants of LoRA, such as QLoRA and LongLoRA, and they all have their applications.

Low-Rank Adaptation (LoRA)

its millions to billions of parameters, making it challenging for ordinary users to complete this task. LoRA, however, enables fine-tuning without the need to adjust all parameters on a large scale. Specifically, LoRA updates only a portion of the model’s parameters, structured as low-rank matrices. This approach significantly reduces the number of parameters that need to be updated, saving time and resources.

Let’s Imagine you are decorating a room in your home. Often, you might consider repainting walls, replacing flooring, rearranging furniture, etc., but doing so can be time-consuming and laborious. If you want to change the style of the room, you can choose to change only the curtains and carpet (just like LoRA only adjusts some parameters of the model). This way, you can still give a room a new look without having to do a major renovation.

Quantization-aware Low-Rank Adaptation (QLoRA)

While LoRA is a game changer in reducing storage requirements, it still requires a powerful GPU to train the model. It is where QLoRA (or Quantitative LoRA) steps in, combining LoRA with quantization to form a more innovative approach.

QLoRA is an optimized version of LoRA, which combines quantization technology to make the fine-tuning process more efficient. Quantization technology converts floating point data in the model (usually 32-bit or 16-bit floating point numbers) into smaller data types (such as 4-bit or 8-bit), which can reduce memory usage and speed up calculations. QLoRA also quantifies the model while performing LoRA fine-tuning, making the entire fine-tuning process more resource-saving.

Continuing with the previous example, let’s say you want to save time, energy, and money. You might replace curtains and carpets with affordable but similar-looking materials, such as cheap but durable fabric instead of expensive silk or imitation wood flooring instead of solid wood flooring (this is like quantization technology in QLoRA). In this way, you not only achieve the purpose of changing the style of the room but also further optimize the cost and resource usage.

Choose the open-source fine-tuning framework

As far as I know, three popular and widely used open-source frameworks are currently available. These three frameworks are Axolotl, Unsloth, and LlamaFactory.

Well, Today, I’m going to introduce you to a tool called Unsloth, a poor man’s fine-tuning solution.

‘Unsloth’ is a neologism that means not lazy, it is used to praise people who constantly strive and develop themselves.

As the name suggests, the Unsloth developers are constantly working to improve the performance and efficiency of fine-tuning.

But interestingly enough, the mascot is a sloth, a symbol of slowness.

The reason I say ‘poor man’s fine-tuning solution’ is because Unsloth provides a way to fine-tune LLM with limited resources, even on consumer graphics cards like GTX.

This could be a valuable solution for many users who have yet to be able to directly tune LLM due to limited GPU resources or low GPU model quality.

Additionally, you can increase the learning rate for more productive fine-tuning.

Unsloth’s Strengths

Unsloth is a tool for optimizing the fine-tuning of LLMs. Here, ‘optimization’ means reducing the memory usage required for fine-tuning and increasing the learning speed.

It is receiving good feedback from users for the following features:

Increased learning speed

Unsloth provides significant learning speed improvements compared to traditional learning techniques.

Although performance may vary depending on the LLM model and GPU type, it outperforms existing learning techniques in most combinations.

Reduce memory usage

Unsloth actively utilizes memory efficiency techniques to minimize the memory usage required for learning.

This allows even large models requiring multiple GPUs to be fine-tuned on a single GPU.

Compatibility with various hardware and hugging face ecosystems

Unsloth supports most NVIDIA GPUs, from the GTX 1070 to the latest H100, and is compatible with huggingface libraries such as Datasets, SFTTrainer, DPOTrainer, and PPOTrainer.

This allows users to leverage their existing familiar development environment.

Fine-Tuning LLama3.1 — 8B

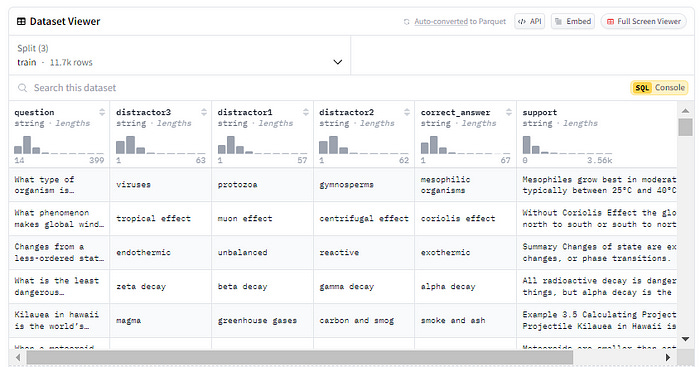

If you still remember, we discussed the exam questions at the beginning of the story. Since I am interested in science, I would like to choose the popular dataset Science Question, which contains 13,679 crowdsourced science exam questions about Physics, Chemistry, and Biology, among others. The dataset is available on Kaggle and Huggingface.

But before we get into fine-tuning the model, let’s first demonstrate LLama3.1–8 B’s ability to generate the initial reasoning output. I will explore the dataset, copy the question, and paste it into the LLama3.1–8B chatbot. Let’s see if the model can answer the question correctly.

question:

The formation of an amalgam allows the metal to react with what?Correct answer :

The metals of group 13 (Al, Ga, In, and Tl) are all reactive. However,

passivation occurs as a tough, hard, thin film of the metal oxide forms

upon exposure to air. Disruption of this film may counter the passivation,

allowing the metal to react. One way to disrupt the film is to expose the

passivated metal to mercury. Some of the metal dissolves in the mercury to

form an amalgam, which sheds the protective oxide layer to expose the metal

to further reaction. The formation of an amalgam allows the metal to react

with air and water. Final answer : air and waterLLama3.1–8B Before Fine-tuning

The LLaMA model answered the question incorrectly because it focused on the formation of the amalgam rather than the subsequent chemical reactions the metal undergoes after the amalgam is formed. And the correct answer is air and water.

another question :

What organ systems link exchange surfaces with cells throughout the body?Correct answer :

42.1 Circulatory systems link exchange surfaces with cells throughout

the body. Final Answer :circulatory LLama3.1–8B Before Fine-tuning

The organ systems that link exchange surfaces with cells throughout the body are:

Circulatory System (Cardiovascular System): The circulatory system consists of the heart, arteries, veins, and blood vessels. It delivers oxygenated blood to cells and organs and picks up deoxygenated blood, carrying it back to the heart for oxygenation and removal of waste products.

Respiratory System: The respiratory system consists of the nose, mouth, trachea, bronchi, lungs, and diaphragm. It takes in oxygen from the air and expels carbon dioxide through gas exchange in the alveoli of the lungs.

Nervous System: The nervous system consists of the brain, spinal cord, and peripheral nerves. It enables communication between the brain and other parts of the body, facilitating the exchange of chemical signals (neurotransmitters) between nerve cells (neurons).

Gastrointestinal System (Digestive System): The gastrointestinal system consists of the mouth, esophagus, stomach, small intestine, and large intestine. It absorbs nutrients from food, eliminating waste products, and helps maintain the body's fluid balance.

Urinary System (Renal System): The urinary system consists of the kidneys, ureters, bladder, and urethra. It filters waste products from the blood, regulating electrolyte levels, and eliminating waste in the form of urine.

These organ systems work together to maintain homeostasis, regulating the exchange of essential substances (such as oxygen, glucose, amino acids, hormones, and ions) between the external environment and cells throughout the body.Data Preparation for Fine-tuning

The quality of the data you feed into the model impacts the model’s result and overall success. One way to ensure this is by gathering a dataset that reflects the problem being solved. The data should be accurate, relevant, complete, and free from bias. Even the best machine learning models and algorithms will struggle to produce meaningful results if the data contains inaccuracies, irrelevant features, missing values, or biases. You should also note how the data is tokenized and formatted based on the input format the particular LLM you are fine-tuning requires.

As mentioned, we will use a high-quality science questions answer dataset containing 13,679 crowdsourced science exam questions. I had to create a function to extract more than 100 rows.

from datasets import load_dataset

# Load the dataset (example using the 'imdb' dataset)

dataset = load_dataset('question')

# Select only the first 100 samples

small_dataset = dataset['train'].select(range(100))

# Specify the columns you want (example: 'text', 'label', 'id')

columns_to_keep = ['question','correct_answer', 'support'] # Change these to match the column names of your dataset

# Remove other columns and keep only the selected ones

small_dataset = small_dataset.remove_columns([col for col in small_dataset.column_names if col not in columns_to_keep])

# Save the selected 100 samples with the specific columns as a JSON file

small_dataset.to_json('dataset.json')Then, I checked the dataset to ensure it was clean and had no missing values. I manually customized the JSON file. Inside the file, there’s a long list of dictionaries. Each one has the system prompt I want the model to respond to, along with the input prompt and the response as the output. I took the question and correct answer from the original dataset and wrote the system prompt’s instructions.

{

"instruction":"You are a scientist. I will provide a question, and you will respond with the result based on your scientific knowledge. Answer the question using the provided context. If the question cannot be answered with the given information, respond with 'I don't know.",

"question":"In which stage does the chromatin condense into chromosomes?",

"correct_answer":"In prophase II, once again the nucleolus disappears and the nucleus breaks down. The chromatin condenses into chromosomes. The spindle begins to reform as the centrioles move to opposite sides of the cell. Final answer : prophase ii"

},Format Data

Formatting data is one of the most important steps when training an LLM on custom data. We need to format the JSON file so Llama3 can understand. It is similar to how people interact with LLMs. To do this, we add special tokens to show when a message starts and ends. These tokens are <|im_start|> and <|im_end|> to show who’s talking.

Let me show you how the format should look. If you remember how we structured the JSON file, it has three keys, and we’ll use them in this format.

<|begin_of_text|><|start_header_id|>system<|end_header_id|>

##{instruction}##

<|eot_id|><|start_header_id|>user<|end_header_id|>

##{question}##

<|eot_id|><|start_header_id|>assistant<|end_header_id|>

##{correct_answer}##

We then load and process the entire dataset to apply the chat template to every conversation and push it to Hugging Face. [dataset], and don’t forget to change huggingface_user to your ID name.

huggingface_user = "GaoDalie"

dataset_name = "ScienceQA"

class Llama3InstructDataset:

def __init__(self, data):

self.data = data

self.prompts = []

self.create_prompts()

def create_prompt(self, row):

prompt = f"""<|begin_of_text|><|start_header_id|>system<|end_header_id|>{row['instruction']}<|eot_id|><|start_header_id|>user<|end_header_id|>{row['question']}<|eot_id|><|start_header_id|>assistant<|end_header_id|>{row['correct_answer']}<|eot_id|>"""

return prompt

def create_prompts(self):

for row in self.data:

prompt = self.create_prompt(row)

self.prompts.append(prompt)

def get_dataset(self):

df = pd.DataFrame({'prompt': self.prompts})

return df

def create_dataset_hf(dataset):

dataset.reset_index(drop=True, inplace=True)

return DatasetDict({"train": Dataset.from_pandas(dataset)})

if __name__ == "__main__":

with open('/content/dataset_QA.json', 'r') as f:

data = json.load(f)

dataset = Llama3InstructDataset(data)

df = dataset.get_dataset()

processed_data_path = 'processed_data'

os.makedirs(processed_data_path, exist_ok=True)

llama3_dataset = create_dataset_hf(df)

llama3_dataset.save_to_disk(os.path.join(processed_data_path, "llama3_dataset"))

llama3_dataset.push_to_hub(f"{huggingface_user}/{dataset_name}")Dataset :

Setup configuration :

Model Configuration :

"model_config": {

"base_model":"unsloth/llama-3-8b-Instruct-bnb-4bit", # The base model

"finetuned_model":"llama-3-8b-Instruct-bnb-4bit-gaodalie-demo", # The finetuned model

"max_seq_length": 2048, # The maximum sequence length

"dtype":torch.float16, # The data type

"load_in_4bit": True, # Load the model in 4-bit

},base_model: specifies the pre-trained model to use as the base model for fine-tuning.finetuned_model: specifies the finetuned model to use for fine-tuning.finetuned_name: specifies the name of the fine-tuned model.max_seq_length: specifies the maximum sequence length that the model can process.dtype: specifies the data type for the model's weights and activations.Nonemeans auto-detection, which will choose the most suitable data type based on the hardware.load_in_4bit: specifies whether to load the model with 4-bit precision, which can reduce memory usage and improve performance.

LoRA Configuration :

"lora_config": {

"r": 16, # The number of LoRA layers 8, 16, 32, 64

"target_modules": ["q_proj", "k_proj", "v_proj", "o_proj",

"gate_proj", "up_proj", "down_proj"], # The target modules

"lora_alpha":16, # The alpha value for LoRA

"lora_dropout":0, # The dropout value for LoRA

"bias":"none", # The bias for LoRA

"use_gradient_checkpointing":True, # Use gradient checkpointing

"use_rslora":False, # Use RSLora

"use_dora":False, # Use DoRa

"loftq_config":None # The LoFTQ configuration

},r: specifies the number of LoRA layers to use.target_modules: specifies the modules to which LoRA should be applied.lora_alpha: specifies the alpha value for LoRA, which controls the strength of the LoRA layers.lora_dropout: specifies the dropout value for LoRA, which controls the random dropping of neurons during training.bias: specifies the bias for LoRA, which can be set to "none" or a specific value.use_gradient_checkpointing: specifies whether to use gradient checkpointing, which can reduce memory usage during training.use_rsloraanduse_dora: specify whether to use RSLora and DoRa, which are variants of LoRA.loftq_config: specifies the LoFTQ configuration, which is not used in this example.

Training Configuration :

"training_config": {

"per_device_train_batch_size": 2, # The batch size

"gradient_accumulation_steps": 4, # The gradient accumulation steps

"warmup_steps": 5, # The warmup steps

"max_steps":0, # The maximum steps (0 if the epochs are defined)

"num_train_epochs": 10, # The number of training epochs(0 if the maximum steps are defined)

"learning_rate": 2e-4, # The learning rate

"fp16": not torch.cuda.is_bf16_supported(), # The fp16

"bf16": torch.cuda.is_bf16_supported(),, # The bf16

"logging_steps": 1, # The logging steps

"optim" :"adamw_8bit", # The optimizer

"weight_decay" : 0.01, # The weight decay

"lr_scheduler_type": "linear", # The learning rate scheduler

"seed" : 42, # The seed

"output_dir" : "outputs", # The output directory

}per_device_train_batch_size: specifies the batch size to use for training.gradient_accumulation_steps: specifies the number of steps to accumulate gradients before updating the model.warmup_steps: specifies the number of warmup steps to perform before starting training.max_steps: specifies the maximum number of steps to train for. If set to 0, the model will train for the specified number of epochs.num_train_epochs: specifies the number of epochs to train for.learning_rate: specifies the initial learning rate to use for training.fp16andbf16: specify whether to use 16-bit floating-point precision (fp16) or 16-bit bfloat precision (bf16) for training.logging_steps: specifies the number of steps to log training metrics.optim: specifies the optimizer to use for training.weight_decay: specifies the weight decay rate to use for regularization.lr_scheduler_type: specifies the learning rate scheduler to use.seed: specifies the random seed to use for training.output_dir: specifies the output directory to save training results.

Training Dataset :

"training_dataset":{

"name":f"{huggingface_user}/{dataset_name}", # The dataset name(huggingface/datasets)

"split":"train", # The dataset split

"input_field":"prompt", # The input field

},name: This specifies the name of the dataset. In this case, it's GaoDalie/ScienceQA, a dataset hosted on the Hugging Face Hub. The format is username/dataset_name.

split: This specifies the split of the dataset to use for training. In this case, it's set to "train", which means the model will be trained on the training split of the dataset.

input_field: This specifies the primary input field of the dataset. In this case, it's set to "text". This field will likely be the text input the model will process.

Loading The Model :

Now, let’s load the model. Since we plan to use QloRA, I chose the pre-quantized `llama-3–8b-Instruct-bnb-4bit`, which downloads faster.

When loading the model, you need to specify a maximum sequence length parameter, which determines the context window size of the model. In this example, we set it to 2,048 because more extended contexts increase computation and memory consumption.

# Loading the model and the tokinizer for the model

model, tokenizer = FastLanguageModel.from_pretrained(

model_name = config.get("model_config").get("base_model"),

max_seq_length = config.get("model_config").get("max_seq_length"),

dtype = config.get("model_config").get("dtype"),

load_in_4bit = config.get("model_config").get("load_in_4bit"),

)LoRA (Low-Rank Adaptation) is a low-rank adapter technology for large language models. Unlike all parameters, during the model fine-tuning process, only about 1% to 10% of the entire model parameters are updated. This way, effective model fine-tuning and optimization are achieved, improving the model’s performance on specific tasks.

# Setup for QLoRA/LoRA peft of the base model

model = FastLanguageModel.get_peft_model(

model,

r = config.get("lora_config").get("r"),

target_modules = config.get("lora_config").get("target_modules"),

lora_alpha = config.get("lora_config").get("lora_alpha"),

lora_dropout = config.get("lora_config").get("lora_dropout"),

bias = config.get("lora_config").get("bias"),

use_gradient_checkpointing = config.get("lora_config").get("use_gradient_checkpointing"),

random_state = 42,

use_rslora = config.get("lora_config").get("use_rslora"),

use_dora = config.get("lora_config").get("use_dora"),

loftq_config = config.get("lora_config").get("loftq_config"),

)Loading the training dataset

dataset_train = load_dataset(config.get("training_dataset").get("name"), split = config.get("training_dataset").get("split"))training parameters

Now we can start configuring the training parameters. I will briefly describe some of the key hyperparameters:

Learning rate: It determines how quickly the model updates its parameters. If the learning rate is too low, training progress will be faster and may get stuck in a locally optimal solution; if it is too high, training may become unstable or even diverge, thereby reducing model performance.

Learning rate scheduler: It dynamically adjusts the learning rate during training, usually using a higher learning rate in the early stages to make rapid progress and gradually reduce it. Linear and cosine scheduling are the two most common schemes.

Batch size: The number of samples processed before each weight update. Larger batches generally lead to more stable gradient estimates and faster training but require more memory. Gradient accumulation can accumulate gradients over multiple forward/backward passes to achieve a larger effective batch size.

Training epochs: The number of times the model traverses the entire training set. More training epochs allow the model to learn the data more fully, which may lead to better performance. However, too many training epochs may lead to overfitting.

Optimizer: An algorithm that adjusts model parameters to minimize a loss function. It is generally recommended to use the 8-bit AdamW: it performs comparably to the 32-bit version with a smaller memory footprint. The paging version of AdamW only makes sense in a distributed training environment.

Weight decay: A regularization technique that adds a penalty for large weights to the loss function to prevent overfitting and help the model learn simpler and more generalizable features. However, excessive weight decay may inhibit model learning.

Warm-up steps: gradually increase the learning rate from a low value to the initial set value at the beginning of training. Warm-up helps stabilize early training, especially when using large learning rates or batches, by allowing the model to gradually adapt to the data distribution before making large updates.

Data Packing: A batch has a predefined sequence length. To improve efficiency, we can pack multiple smaller samples into one batch.

# Setting up the trainer for the model

trainer = SFTTrainer(

model = model,

tokenizer = tokenizer,

train_dataset = dataset_train,

dataset_text_field = config.get("training_dataset").get("input_field"),

max_seq_length = config.get("model_config").get("max_seq_length"),

dataset_num_proc = 2,

packing = False,

args = TrainingArguments(

per_device_train_batch_size = config.get("training_config").get("per_device_train_batch_size"),

gradient_accumulation_steps = config.get("training_config").get("gradient_accumulation_steps"),

warmup_steps = config.get("training_config").get("warmup_steps"),

max_steps = config.get("training_config").get("max_steps"),

num_train_epochs= config.get("training_config").get("num_train_epochs"),

learning_rate = config.get("training_config").get("learning_rate"),

fp16 = config.get("training_config").get("fp16"),

bf16 = config.get("training_config").get("bf16"),

logging_steps = config.get("training_config").get("logging_steps"),

optim = config.get("training_config").get("optim"),

weight_decay = config.get("training_config").get("weight_decay"),

lr_scheduler_type = config.get("training_config").get("lr_scheduler_type"),

seed = 42,

output_dir = config.get("training_config").get("output_dir"),

),

)Once the model is trained, then we save the trained model. From the LoRA and QLoRA parts, we didn’t train the whole model, but an adapter module. In Unsloth, there are three ways to save: lorasaves only the adapter, merged_16bit and merged_4bit save the adapter and model together in 16-bit or 4-bit precision. Then, we push our model to a hugging face. Please feel free to check it out [link]

model.save_pretrained_gguf(config.get("model_config").get("finetuned_model"), tokenizer, quantization_method = "q4_k_m")Congratulations, we have fine-tuned a model! Now, let’s test the fine-tuned model and check the results; then, we compare them with the previous output by asking the chatbot the same question as before

from llama_cpp import Llama

from langchain_community.llms import LlamaCpp

from langchain_core.callbacks import CallbackManager, StreamingStdOutCallbackHandler

from langchain_core.prompts import PromptTemplate

my_model_path = "/content/llama-3-8b-Instruct-bnb-4bit-gaodalie-1-demo/unsloth.Q4_K_M.gguf"

CONTEXT_SIZE = 512

llama_model = Llama(model_path=my_model_path,

n_ctx=CONTEXT_SIZE)

template = """ You are a scientist. I will provide a question, and you will respond with the result based on your scientific knowledge. Answer the question using the provided context. If the question cannot be answered with the given information, respond with 'I don't know

Question: {question}

Answer:"""

prompt = PromptTemplate.from_template(template)

callback_manager = CallbackManager([StreamingStdOutCallbackHandler()])

my_model_path = "/content/llama-3-8b-Instruct-bnb-4bit-gaodalie-1-demo/unsloth.Q4_K_M.gguf"

# 初始化 LLM 设置

llm = LlamaCpp(

model_path=my_model_path,

temperature=0.75,

max_tokens=300,

top_p=1,

callback_manager=callback_manager,

verbose=True,

)

question = """

Question: What organ systems link exchange surfaces with cells throughout the body?

"""

llm.invoke(question)Question 1 :

The formation of an amalgam allows the metal to react with what?After Fine-tuning the model

A. Air\nB. Water\nC. Mercury\nD. The surrounding tooth material

\n\nAnswer: D. The surrounding tooth material\nThe formation of an amalgam

allows the metal to react with the surrounding tooth material, which is

mostly dentin. The mercury in the amalgam reacts with the copper in the

alloy to form a stable compound that prevents further corrosion.

The resulting amalgam is strong and durable, making it well suited for

use in dental restorations such as fillings and inlays. In addition,

amalgams are also used in crowns, bridges, and dentures. Amalgams have

been used in dentistry for over 150 years, and they continue to be a

popular choice among dentists due to their durability, strength, and

ease of use. However, some people may experience adverse effects from the

mercury in amalgams, such as oral or gastrointestinal symptoms, or

neurological problems. As a result, some dentists may choose to avoid

using amalgams in their practice, or they may take steps to minimize

exposure to mercury for themselves and their patients. In addition, many

dental schools and organizations are working to educate dentists about the

safe use of amalgams and to promote the development of alternative restorative

materials that do not contain mercury. The ADA has also developed guidelines

for the safe handling and disposal of amalgam waste. These guidelines

recommend that dentists take steps to minimize exposure to mercury, such

as wearing gloves when handling amalgam, washing hands thoroughly after

handling'Question 2 :

What organ systems link exchange surfaces with cells throughout the body?After Fine-tuning the model

A. circulatory system\nB. respiratory system\nC. skeletal system

\nD. integumentary system\n\nAnswer: A. circulatory system

\nThe circulatory system, also known as the cardiovascular system, is

responsible for moving blood throughout the body. It links exchange

surfaces with cells throughout the body. The main organs of the circulatory

system are the heart, arteries, veins, and blood vessels. The heart pumps

blood throughout the body. Arteries carry oxygenated blood away from the heart.

Veins return deoxygenated blood to the heart. Blood vessels include both

arteries and veins. The circulatory system is responsible for delivering

oxygen and nutrients to cells and organs throughout the body. It also picks up

waste products and returns them to the excretory system for elimination.

In addition, the circulatory system plays a key role in maintaining body

temperature, as it helps to distribute heat generated by metabolic processes

throughout the body. The circulatory system is also involved in the transport

of immune cells, such as white blood cells, throughout the body. These immune

cells play a crucial role in defending the body against pathogens and other

foreign substances. In summary, the circulatory system is responsible for

delivering oxygen and nutrients to cells and organs throughout the body.

It also picks up waste products and returns them to the excretory system for

elimination. The circulatory system plays a key role in maintaining body

temperature, as it helps to distribute heat generated by metabolic processes

throughout the body.Experiments and Results:

In this study, I want to evaluate the performance of large language models (LLMs) after and before fine-tuning. I will use G-eval, BERTScore and human feedback to assess the model’s performance.

In our experiments, we aim to answer the following questions:

RQ1: What organ systems link exchange surfaces with cells throughout the body?

RQ2: The formation of an amalgam allows the metal to react with what?

User-as-a-judge (human feedback) :

Question 1: As is shown before-fine-tuning output fails to focus on the original topic, while the after-fine-tuning output shifts to an unrelated context about dental amalgams, ultimately missing the core question.

Question 2: The evidence shows that fine-tuning significantly improved the model’s performance. Before fine-tuning, the model provided excessive information, including irrelevant information. After fine-tuning, the model gave the correct and concise answer, directly addressing the circulatory system and its role.

LLM-as-a-judge :

in this part, we delve into the evaluation techniques using simple examples. We explore evaluation methods like G-eval and BertScore.

Evaluating using G-Eval.

G-Eval is a framework for evaluating the quality of NLG output using [CoT (Chain-of-Thought)], which has been devised.

Since G-Eval does not rely on references, it can [* assess the quality of content based solely on the input prompt and the generated text], and has shown the highest correlation with human evaluations compared to other metrics.

It produces scores from 1 to 5 based on four criteria:

Relevance: Evaluate whether the correct output contains only important information and excludes unnecessary details.

Coherence: Assesses the logical flow and structure of the correct output.

Consistency: Verifies that the correct output aligns with the facts of the original document.

Fluency: Evaluate the grammar and readability of the correct output.

EVALUATION_PROMPT_TEMPLATE = """

You will be given one actual output for the expected_output. Your task is to rate the actual output on one metric.

Please make sure you read and understand these instructions very carefully.

Please keep this expected output open while reviewing, and refer to it as needed.

Evaluation Criteria:

{criteria}

Evaluation Steps:

{steps}

Example:

Source Text:

{expected_output}

Actual Output:

{actual_output}

Evaluation Form (scores ONLY):

- {metric_name}

"""

# Metric 1: Relevance

RELEVANCY_SCORE_CRITERIA = """

Relevance(1-5) - selection of important content from the expected output. \

The actual output should include only important information from the expected output. \

Annotators were instructed to penalize expected output which contained redundancies and excess information.

"""

RELEVANCY_SCORE_STEPS = """

1. Read the summary and the source document carefully.

2. Compare the summary to the source document and identify the main points of the article.

3. Assess how well the summary covers the main points of the article, and how much irrelevant or redundant information it contains.

4. Assign a relevance score from 1 to 5.

"""

# Metric 2: Coherence

COHERENCE_SCORE_CRITERIA = """ Coherence - the collective quality of all sentences in the actual output based on the expected output

"""

COHERENCE_SCORE_STEPS = """

1. Read the expected output carefully and identify the main topic and key points.,

2. Read the actual output and compare it to the expected output. Check if the actual output covers the main topic and key points of the expected output,and if it presents them in a clear and logical order.,

3. Assign a score for coherence on a scale of 1 to 5, where 1 is the lowest and 5 is the highest based on the Evaluation Criteria.

"""

# Metric 3: Consistency

CORRECTNESS_SCORE_CRITERIA = """ Determine whether the actual output is factually correct based on the expected output.

"""

CORRECTNESS_SCORE_STEPS = """

1. Read the actual output carefully,

2. Compare the actual output to the expected output and identify the main points of the expected out,

3. Assess how well the actual output the main points of the expected output, and how much irrelevant or redundant information it contains.,

4. Assign a relevance score from 1 to 5.For each of these criteria, a prompt is created that uses the original document and summary as inputs and leverages [CoT (Chain-of-Thought)] to make the model output a numerical score from 1 to 5 for each criterion. The defined prompt generates scores, which are compared across the output.

def highlight_max(s):

is_max = s == s.max()

return [

"font-weight: bold" if v else "" # No background, just bold the max value

for v in is_max

]

def get_geval_score(

criteria: str, steps: str, expected_output: str, actual_output: str, metric_name: str

):

prompt = EVALUATION_PROMPT_TEMPLATE.format(

criteria=criteria,

steps=steps,

expected_output=expected_output, # Correct placeholder

actual_output=actual_output, # Correct placeholder

metric_name=metric_name,

)

response = client.chat.completions.create(

model="gpt-4",

messages=[{"role": "user", "content": prompt}],

temperature=0,

max_tokens=5,

top_p=1,

frequency_penalty=0,

presence_penalty=0,

)

return response.choices[0].message.content

evaluation_metrics = {

"Relevance": (RELEVANCY_SCORE_CRITERIA, RELEVANCY_SCORE_STEPS),

"Coherence": (COHERENCE_SCORE_CRITERIA, COHERENCE_SCORE_STEPS),

"Correctness": (CORRECTNESS_SCORE_CRITERIA, CORRECTNESS_SCORE_STEPS),

}

summaries = {"before_fine_tuning": before_fine_tuning, "after_fine_tuning": after_fine_tuning}

data = {"Evaluation Type": [], "Summary Type": [], "Score": []}

for eval_type, (criteria, steps) in evaluation_metrics.items():

for summ_type, summary in summaries.items():

data["Evaluation Type"].append(eval_type)

data["Summary Type"].append(summ_type)

result = get_geval_score(criteria, steps, expected_output, summary, eval_type)

score_num = int(result.strip())

data["Score"].append(score_num)

pivot_df = pd.DataFrame(data, index=None).pivot(

index="Evaluation Type", columns="Summary Type", values="Score"

)

styled_pivot_df = pivot_df.style.apply(highlight_max, axis=1)

display(styled_pivot_df)GPT-4 is used with a direct scoring function that generates discrete scores (1–5) for each metric. By normalizing the scores and taking a weighted sum, a more robust and continuous score can be obtained, better reflecting the quality and diversity of the summaries.

Evaluating using BERTScore

We use BERTScore to evaluate text generation. BERTScore provides precision, recall, and F1 scores, allowing users to comprehensively understand their models’ performance.

Its scores lie between 0 and 1, with 0 representing no semantic similarity and 1 representing a perfect semantic match between candidate and reference texts on three criteria.

F1 Score: evaluation metric that measures a model’s accuracy

Precision: measures the accuracy of the retrieved items

Recall: measures how many relevant items were correctly retrieved

from bert_score import BERTScorer

# Instantiate the BERTScorer object for English language

scorer = BERTScorer(lang="en")

# Calculate BERTScore for the summary 1 against the excerpt

# P1, R1, F1_1 represent Precision, Recall, and F1 Score respectively

P1, R1, F1_1 = scorer.score([before_fine_tuning], [expected_output])

# Calculate BERTScore for summary 2 against the excerpt

# P2, R2, F2_2 represent Precision, Recall, and F1 Score respectively

P2, R2, F2_2 = scorer.score([after_fine_tuning], [expected_output])

print("before_fine_tuning F1 Score:", F1_1.tolist()[0])

print("after_fine_tuning 2 F1 Score:", F2_2.tolist()[0])

print("before_fine_tuning Precision:", P1.tolist()[0])

print("after_fine_tuning 2 Precision:", P2.tolist()[0])

print("before_fine_tuning Recall:", R1.tolist()[0])

print("after_fine_tuning 2 Recall:", R2.tolist()[0])Results Table:

For Question 1, As you see the fine-tuning significantly improved relevance while maintaining coherence and correctness. The F1 Score, precision, and recall improved slightly, suggesting a small but overall improvement in the model’s performance.

For Question 2, despite small improvements in F1 Score, precision, and recall after fine-tuning, the model still struggled with coherence, correctness, and relevance, indicating that further fine-tuning or modifications are needed to enhance the model performance.

Hybrid RAG + Fine-tuning

Hybrid systems blend the benefits of RAG and fine-tuning in a single pipeline:

The retriever provides fast access to expansive, fresh external data.

Fine-tuning deeply specializes in model behavior for the domain.

The generator produces outputs utilizing both external context and fine-tuned domain knowledge.

Benefits of Hybrid RAG + Finetuning

Key advantages of combined systems include:

Reduced hallucinations through external grounding.

Domain adaptation from fine-tuning on niche data.

Scalability by expanding retriever data vs. retraining.

Speed of fine-tuned model directly generating text.

Retrieval relevance improved via fine-tuned reader.

No compromise on fresh data or domain fit.

I developed the hybrid RAG system and implemented the corrective RAG algorithm as an external system. I used a low-relevance knowledge base to test the external system and fine-tune the model’s performance, as I didn’t intend to retrieve data from the knowledge base.

If you want to know more about the Corrective Rag, please check these well-researched articles; I have explained them better.

loading Model

from huggingface_hub import hf_hub_download

model_rpo = "GaoDalie/llama-3-8b-Instruct-bnb-4bit-gaodalie-demo"

model_file = "unsloth.Q4_K_M.gguf"

local_dir = "Models"

download_file = hf_hub_download(repo_id=model_rpo,filename=model_file,local_dir=local_dir)

print(f"model download to: {download_file}")Import relevant libraries

import os

import sys

from dotenv import load_dotenv

from langchain.prompts import PromptTemplate

from langchain_core.pydantic_v1 import BaseModel, Field

import json

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain_community.llms import LlamaCpp

from typing import List , Tuple

sys.path.append(os.path.abspath(os.path.join(os.getcwd(), '..'))) # Add the parent directory to the path sicnce we work with notebooks

from langchain_community.document_loaders import PyPDFLoader

from langchain_community.vectorstores import FAISS

from langchain_community.tools import DuckDuckGoSearchResults

from langchain_huggingface import HuggingFaceEmbeddingsDefine files path

Model_Path = 'Models/unsloth.Q4_K_M.gguf'

path = "/content/Lecture8.pdf"Create a vector store

vectorstore = encode_pdf(path)Initialize Embeddings Model

embed_model = HuggingFaceEmbeddings(model_name="BAAI/bge-small-en-v1.5")Initialize search tool

search = DuckDuckGoSearchResults()Define retrieval evaluator, knowledge refinement and query rewriter LLM chains

# Retrieval Evaluator

class RetrievalEvaluatorInput(BaseModel):

relevance_score: float = Field(..., description="The relevance score of the document to the query. the score should be between 0 and 1.")

def retrieval_evaluator(query: str, document: str) -> float:

prompt = PromptTemplate(

input_variables=["query", "document"],

template="On a scale from 0 to 1, how relevant is the following document to the query? Query: {query}\nDocument: {document}\nRelevance score:"

)

dict_schema = convert_to_openai_tool(RetrievalEvaluatorInput)

print("Prompt:", prompt)

print("Schema:", dict_schema)

chain = prompt | llm.with_structured_output(dict_schema)

input_variables = {"query": query, "document": document}

print("Input variables:", input_variables)

result = chain.invoke(input_variables)

print(f"Result from chain: {result}")

relevance_score = result['relevance_score']

return relevance_score

# Knowledge Refinement

class KnowledgeRefinementInput(BaseModel):

key_points: str = Field(..., description="The document to extract key information from.")

def knowledge_refinement(document: str) -> List[str]:

prompt = PromptTemplate(

input_variables=["document"],

template="Extract the key information from the following document in bullet points:\n{document}\nKey points:"

)

chain = prompt | llm.with_structured_output(KnowledgeRefinementInput)

input_variables = {"document": document}

result = chain.invoke(input_variables).key_points

return [point.strip() for point in result.split('\n') if point.strip()]

# Web Search Query Rewriter

class QueryRewriterInput(BaseModel):

query: str = Field(..., description="The query to rewrite.")

def rewrite_query(query: str) -> str:

prompt = PromptTemplate(

input_variables=["query"],

template="Rewrite the following query to make it more suitable for a web search:\n{query}\nRewritten query:"

)

chain = prompt | llm.with_structured_output(QueryRewriterInput)

input_variables = {"query": query}

return chain.invoke(input_variables).query.strip()Helper function to parse search results

from langchain_core.tools import Tool

from langchain_community.utilities import SerpAPIWrapper

# Initialize the SerpAPIWrapper with your API key

search = SerpAPIWrapper(serpapi_api_key=api_key)

def top5_results(query: str) -> List:

"""

Retrieve the search results for a given query

and return them directly.

Args:

query (str): The search query to execute.

Returns:

List: The search results returned by the SerpAPIWrapper.

"""

# Use the run method and return the results directly

results = search.run(query) # Pass only the query

print("Retrieved search results:", results) # Debugging line

return results # Return the raw results

# Define the tool

tool = Tool(

name="Google Search Snippets",

description="Search Google for recent results.",

func=top5_results,

)Define sub-functions for the CRAG process

def retrieve_documents(query: str, faiss_index: FAISS, k: int = 3) -> List[str]:

"""

Retrieve documents based on a query using a FAISS index.

Args:

query (str): The query string to search for.

faiss_index (FAISS): The FAISS index used for similarity search.

k (int): The number of top documents to retrieve. Defaults to 3.

Returns:

List[str]: A list of the retrieved document contents.

"""

docs = faiss_index.similarity_search(query, k=k)

return [doc.page_content for doc in docs]

def evaluate_documents(query: str, documents: List[str]) -> List[float]:

"""

Evaluate the relevance of documents based on a query.

Args:

query (str): The query string.

documents (List[str]): A list of document contents to evaluate.

Returns:

List[float]: A list of relevance scores for each document.

"""

return [retrieval_evaluator(query, doc) for doc in documents]

def perform_web_search(query: str) -> Tuple[List[str], List[Tuple[str, str]]]:

"""

Perform a web search based on a query.

Args:

query (str): The query string to search for.

Returns:

Tuple[List[str], List[Tuple[str, str]]]:

- A list of refined knowledge obtained from the web search.

- A list of tuples containing titles and links of the sources.

"""

rewritten_query = rewrite_query(query)

web_results = search.run(rewritten_query)

web_knowledge = knowledge_refinement(web_results)

sources = parse_search_results(web_results)

return web_knowledge, sources

def generate_response(query: str, knowledge: str, sources: List[Tuple[str, str]]) -> str:

"""

Generate a response to a query using knowledge and sources.

Args:

query (str): The query string.

knowledge (str): The refined knowledge to use in the response.

sources (List[Tuple[str, str]]): A list of tuples containing titles and links of the sources.

Returns:

str: The generated response.

"""

response_prompt = PromptTemplate(

input_variables=["query", "knowledge", "sources"],

template="Based on the following knowledge, answer the query. Include the sources with their links (if available) at the end of your answer:\nQuery: {query}\nKnowledge: {knowledge}\nSources: {sources}\nAnswer:"

)

input_variables = {

"query": query,

"knowledge": knowledge,

"sources": "\n".join([f"{title}: {link}" if link else title for title, link in sources])

}

response_chain = response_prompt | llm

return response_chain.invoke(input_variables).contentCRAG process

def crag_process(query: str, faiss_index: FAISS) -> str:

"""

Process a query by retrieving, evaluating, and using documents or performing a web search to generate a response.

Args:

query (str): The query string to process.

faiss_index (FAISS): The FAISS index used for document retrieval.

Returns:

str: The generated response based on the query.

"""

print(f"\nProcessing query: {query}")

# Retrieve and evaluate documents

retrieved_docs = retrieve_documents(query, faiss_index)

eval_scores = evaluate_documents(query, retrieved_docs)

print(f"\nRetrieved {len(retrieved_docs)} documents")

print(f"Evaluation scores: {eval_scores}")

# Determine action based on evaluation scores

max_score = max(eval_scores)

sources = []

if max_score > 0.7:

print("\nAction: Correct - Using retrieved document")

best_doc = retrieved_docs[eval_scores.index(max_score)]

final_knowledge = best_doc

sources.append(("Retrieved document", ""))

elif max_score < 0.3:

print("\nAction: Incorrect - Performing web search")

final_knowledge, sources = perform_web_search(query)

else:

print("\nAction: Ambiguous - Combining retrieved document and web search")

best_doc = retrieved_docs[eval_scores.index(max_score)]

# Refine the retrieved knowledge

retrieved_knowledge = knowledge_refinement(best_doc)

web_knowledge, web_sources = perform_web_search(query)

final_knowledge = "\n".join(retrieved_knowledge + web_knowledge)

sources = [("Retrieved document", "")] + web_sources

print("\nFinal knowledge:")

print(final_knowledge)

print("\nSources:")

for title, link in sources:

print(f"{title}: {link}" if link else title)

# Generate response

print("\nGenerating response...")

response = generate_response(query, final_knowledge, sources)

print("\nResponse generated")

return responseExample query with low relevance to the document

query = "What tells you how much of the food you should eat to get the nutrients listed on the label?"

result = crag_process(query, vectorstore)

print(f"Query: {query}")

print(f"Answer: {result}")query = "What type of ions do ionic compounds contain?"

result = crag_process(query, vectorstore)

print(f"Query: {query}")

print(f"Answer: {result}")query = "How many chambers does the stomach of a crocodile have?"

result = crag_process(query, vectorstore)

print(f"Query: {query}")

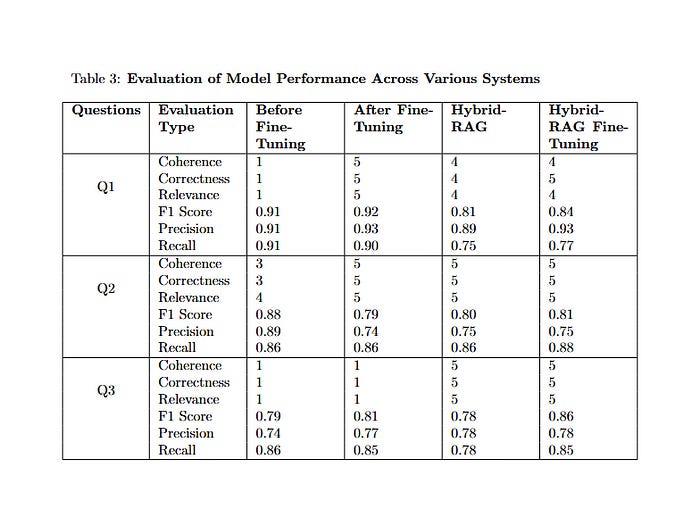

print(f"Answer: {result}")To assess how well each LLM performs, we used a detailed method with three measures: LLM evaluation, where GPT-4o was prompted to give a score of 1 or 5 based on whether the model’s response and the reference answer conveyed the same idea; BERTScore to evaluate the similarity between the text summary and the original text; and human feedback.

We tested on 3 different questions:

RQ1: What tells you how much of the food you should eat to get the nutrients listed on the label?

RQ2: What type of ions do ionic compounds contain?

RQ3: How many chambers does the stomach of a crocodile have?

User-as-a-judge (human feedback) :

Question 1: As you can see, Before fine-tuning, the model generated information about Daily Value instead of the correct details on serving size, revealing a need for more clarity. After fine-tuning, it improved by providing the proper information about serving size, but it still needed key details like the serving unit and calorie count. The HybridRAG model gave minimal but accurate responses, and after fine-tuning, it provided a better answer by adding context on serving size, though it remained less detailed than expected.

Question 2: As you may have noticed, Before fine-tuning, the model didn’t generate enough detail and missed mentioning positive and negative ions, which were the main focus of the question. It also generated unrelated information, showing signs of hallucination; after fine-tuning, it improved by thoroughly explaining positive and negative ions, but it included extra information that wasn’t necessary. The HybridRAG model generated an answer that was technically correct but too complicated and lacked clarity; after fine-tuning, the HybridRAG model produced a clearer and more relevant response, although it still contained some unnecessary details beyond the expected answer.

Question 3: As you may know, the model didn’t generate accurate information before fine-tuning. It incorrectly said that crocodiles lack chambered stomachs, which showed it didn’t understand the topic. After fine-tuning, the response still missed the mark by generating the impression that crocodiles don’t have stomachs at all, ignoring the critical fact that they have two chambers. The HybridRAG model did mention the correct number of chambers but didn’t explain enough for better understanding. After fine-tuning, the HybridRAG model clearly stated that crocodiles have two chambers in their stomach, which was much closer to the expected answer but could still use more explanation.

LLM-as-a-judge :

Results Table:

Question 1: As we can see, fine-tuning significantly enhances performance across all metrics for both configurations. The fine-tuned model achieves excellent coherence, correctness, and relevance scores, while Hybrid-RAG shows moderate improvement after fine-tuning, particularly in correctness and precision. Despite these improvements, Hybrid-RAG still underperforms compared to the fine-tuned model in coherence, relevance, and overall retrieval effectiveness, highlighting that fine-tuning enhances Hybrid-RAG. Still, it reaches a different level of performance than the fine-tuned model.

Question 2: From what is presented, the fine-tuning leads to substantial improvements across all evaluation types for both the standard and Hybrid-RAG models, particularly in qualitative measures such as Coherence, Correctness, and Relevance. After fine-tuning, the Hybrid-RAG model shows a balanced increase in both precision and recall, with higher precision than recall, emphasizing its ability to return highly relevant responses with occasional gaps in retrieval. Overall, fine-tuning enhances both versions by boosting accuracy and ensuring better adherence to factual and linguistic criteria, with the Hybrid-RAG model performing exceptionally well in precision.

Question 3: As you may notice, the Hybrid-RAG with Fine-Tuning model performs best overall, achieving high coherence, correctness, relevance, and the highest F1 score. Fine-tuning alone only yields minor improvements in precision and F1 score, while Hybrid-RAG without fine-tuning enhances coherence and relevance but shows slight weaknesses in precision and recall. Fine-tuning the Hybrid-RAG effectively addresses these weaknesses, making it the most balanced model across all evaluation metrics.

Conclusion :

The hybrid-RAG with Fine-Tuning represents a significant advancement in model performance, particularly in reducing hallucinations. This technique effectively combines the strengths of different retrieval mechanisms, allowing for more accurate and relevant responses. Implementing hybrid RAG with Fine-Tuning addresses previous weaknesses and enhances overall effectiveness.

References :

https://mlabonne.github.io/blog/posts/2024-07-29_Finetune_Llama31.html

https://github.com/NirDiamant/RAG_Techniques/blob/main/all_rag_techniques/crag.ipynb

https://cookbook.openai.com/examples/evaluation/how_to_eval_abstractive_summarization

🧙♂️ I am an AI Generative expert! If you want to collaborate on a project, drop an inquiry here or Book a 1-on-1 Consulting Call With Me.